The emergence over the past decade of synthetic genomics, a set of methods for the synthesis of entire microbial genomes from simple chemical building blocks, has elicited concerns about the potential misuse of this technology for harmful purposes. In 2002, scientists at Stony Brook University recreated the polio virus from scratch based on its published genetic sequence.[1] This demonstration prompted fears that terrorist organizations might exploit the same technique to synthesize more deadly viral agents, such as the smallpox virus, as biological weapons.[2] Since then, legitimate scientists have recreated other pathogenic viruses in the laboratory, including a SARS-like virus and the formerly extinct strain of influenza virus responsible for the 1918-19 “Spanish Flu” pandemic, which is estimated to have infected a third of the world population and killed three to five percent.[3] (The scientific rationale for resurrecting the 1918 influenza virus was to gain insight into the genetic factors that made it so virulent, thereby guiding the development of antiviral drugs that would be effective against future pandemic strains of the disease.[4])

In Memoriam

The New Atlantis notes with great sadness the passing of our contributor Jonathan B. Tucker. An esteemed and prolific expert in nonproliferation policy, he was described by a close colleague as a “humble giant” of his field.

In assessing the risk that would-be bioterrorists could misuse synthetic genomics to recreate dangerous viruses, a central question is whether they could master the necessary technical skills. Skeptics point out that whole-genome synthesis demands multiple sets of expertise, including considerable “tacit knowledge” that cannot be transmitted in writing but must be gained through years of hands-on experience in the laboratory. Other scholars disagree, arguing that genome synthesis is subject to a process of “de-skilling,” a gradual decline in the amount of tacit knowledge required to master the technology that will eventually make it accessible to non-experts, including those with malicious intent. This debate is of more than academic interest because it is central to determining the security risks associated with the rapid progress of biological science and technology.

Sociologists of science distinguish between two types of technical knowledge: explicit and tacit. Explicit knowledge is information that can be codified, written down in the form of a recipe or laboratory protocol, and transferred from one individual to another by impersonal means, such as publication in a scientific journal. Tacit knowledge, by contrast, involves skills, know-how, and sensory cues that are vital to the successful use of a technology but that cannot be reduced to writing and must be acquired through hands-on practice and experience.[5] Scientific procedures and techniques requiring tacit knowledge do not diffuse as rapidly as those that are readily codified.

Tacit knowledge can itself be divided into two types. Personal tacit knowledge is held by individuals and can be conveyed from one person to another through a master-apprentice relationship (learning by example) or acquired by a lengthy process of trial-and-error problem solving (learning by doing). The amount of time required to gain personal tacit knowledge depends on the complexity of a task and the level of skill involved in its execution.[6] Moreover, such knowledge tends to decay if it is not practiced on a regular basis and transmitted to the next generation. Communal tacit knowledge is more complex because it is not held by a single individual but resides in an interdisciplinary team of specialists, each of whom has skills and experience that cohere into a larger scientific project or experimental protocol. This social dimension makes communal tacit knowledge particularly difficult to transfer from one laboratory to another, because doing so requires transplanting and replicating a complex set of technical practices in a new context.[7]

Field research by sociologists of science has shown that advanced biotechnologies such as whole-genome synthesis demand high levels of both personal and communal tacit knowledge. For example, Kathleen Vogel of Cornell University found that the Stony Brook researchers who synthesized the polio virus did not rely exclusively on written protocols but made extensive use of intuitive skills acquired through years of experience. Tacit knowledge was particularly important in one step of the process: preparing the cell-free extracts needed to translate the synthetic genome into infectious virus particles. Unless the cell-free extract was prepared correctly, which depended on subtle tricks and sensory cues, the published experiment could not be reproduced.[8]

Based on her empirical research, Vogel concludes that biotechnology is a “socio-technical assemblage” — an activity whose technical and social dimensions are inextricably linked.[9] Such factors help to explain the problems that scientists often encounter when trying to replicate a research protocol developed in another laboratory, or when translating a scientific discovery from the research bench to commercial application.[10] Despite the ongoing “revolution” in the life sciences, these traditional bottlenecks persist. Other case studies of technological innovation have confirmed the importance of the socio-technical dimension, which includes tacit knowledge, teamwork, laboratory infrastructure, and organizational factors.

In the field of whole-genome synthesis, for example, the importance of socio-technical factors continues to grow as scientists take on larger and more complex genomes. Researchers at the J. Craig Venter Institute announced in May 2010 that they had synthesized an artificial bacterial genome consisting of more than one million DNA base pairs, a task that required a unique configuration of expertise and resources.[11] In an interview, Dr. Venter noted that at each stage in the process, a team of highly skilled and experienced molecular biologists had to develop new methodologies, which could be made to work only through a lengthy process of trial and error. For instance, because the long molecules of synthetic bacterial DNA were fragile, they had to be stored in supercoiled form inside gel blocks and handled carefully to keep them from breaking up. “As with all things in science,” Venter explained, “it’s the little tiny breakthroughs on a daily basis that make for the big breakthrough.”[12]

Recent developments in scientific publishing also reflect the fact that the growing complexity of research tools and processes has increased the importance of tacit knowledge. One online scientific publication, the Journal of Visualized Experiments, has since 2006 used video recordings of experimental techniques to portray subtle details that cannot be captured in written form.[13] Other online repositories of research-protocol videos include Dnatube.com and SciVee.tv.[14] Based on such evidence, Vogel and Sonia Ben Ouagrham-Gormley of George Mason University have concluded that the technical and socio-organizational hurdles involved in whole-genome synthesis pose a major obstacle to the ability of terrorist organizations to exploit this technology for harmful purposes.[15]

Other scholars, however, reach the opposite conclusion. Members of this second school point to a countervailing trend in biotechnological development that they claim will ultimately prove stronger: the evolution of many emerging technologies involves a process of de-skilling that, over time, reduces the amount of tacit knowledge required for their use. Chris Chyba of Princeton, for example, contends that as whole-genome synthesis is automated, commercialized, and “black-boxed,” it will become more accessible to individuals with only basic scientific skills, including terrorists and other malicious actors.[16]

De-skilling has already occurred in several genetic-engineering techniques that have been around for more than twenty years, including gene cloning (copying foreign genes in bacteria), transfection (introducing foreign genetic material into a cell), ligation (stitching fragments of DNA together), and the polymerase chain reaction, or PCR (which makes it possible to copy any particular DNA sequence several million-fold). Although one must have access to natural genetic material to use these techniques, the associated skill sets have diffused widely across the international scientific community. In fact, a few standard genetic-engineering techniques have been de-skilled to the point that they are now accessible to undergraduates and even advanced high school students, and could therefore be appropriated fairly easily by terrorist groups.

Gerald Epstein, of the Center for Science, Technology, and Security Policy, writes that whole-genome synthesis “appears to be following a trajectory familiar to other useful techniques: Originally accessible only to a handful of top research groups working at state-of-the-art facilities, synthesis techniques are becoming more widely available as they are refined, simplified, and improved by skilled technicians and craftsmen. Indeed, they are increasingly becoming ‘commoditized,’ as kits, processes, reagents, and services become available for individuals with basic lab training.”[17] In 2007 Epstein and three co-authors predicted that “ten years from now, it may be easier to synthesize almost any pathogenic virus than to obtain it through other means,” although they did not imply that individuals with only basic scientific training would be among the first to acquire this capability.[18]

To date, the de-skilling of synthetic genomics has affected only a few elements of what is actually a complex, multi-step process. Practitioners of de novo viral synthesis note that the most challenging steps do not involve the synthesis of DNA fragments, which can be ordered from commercial suppliers, but the assembly of these fragments into a functional genome and the expression of the viral proteins. According to a report by the U.S. National Science Advisory Board for Biosecurity, a federal advisory committee, “The technology for synthesizing DNA is readily accessible, straightforward and a fundamental tool used in current biological research. In contrast, the science of constructing and expressing viruses in the laboratory is more complex and somewhat of an art. It is the laboratory procedures downstream from the actual synthesis of DNA that are the limiting steps in recovering viruses from genetic material.”[19]

Along similar lines, virologist Jens Kuhn has called for a more nuanced assessment of the technical challenges involved in de novo viral synthesis. He notes, for example, that constructing the polio virus from scratch was fairly straightforward because its genome is small and consists of a single positive strand of RNA that, when placed in a cell-free extract, spontaneously directs the production of viral proteins, which then self-assemble to yield infectious viral particles. By contrast, the genomes of negative-strand RNA viruses, such as Ebola or the 1918 strain of influenza, are not infectious by themselves but require the presence of viral helper proteins, which must be synthesized and present in the host cells in the right numbers. Because such reverse-genetic systems are relatively difficult to create, only a limited number of scientists have the requisite skills and tacit knowledge.[20]

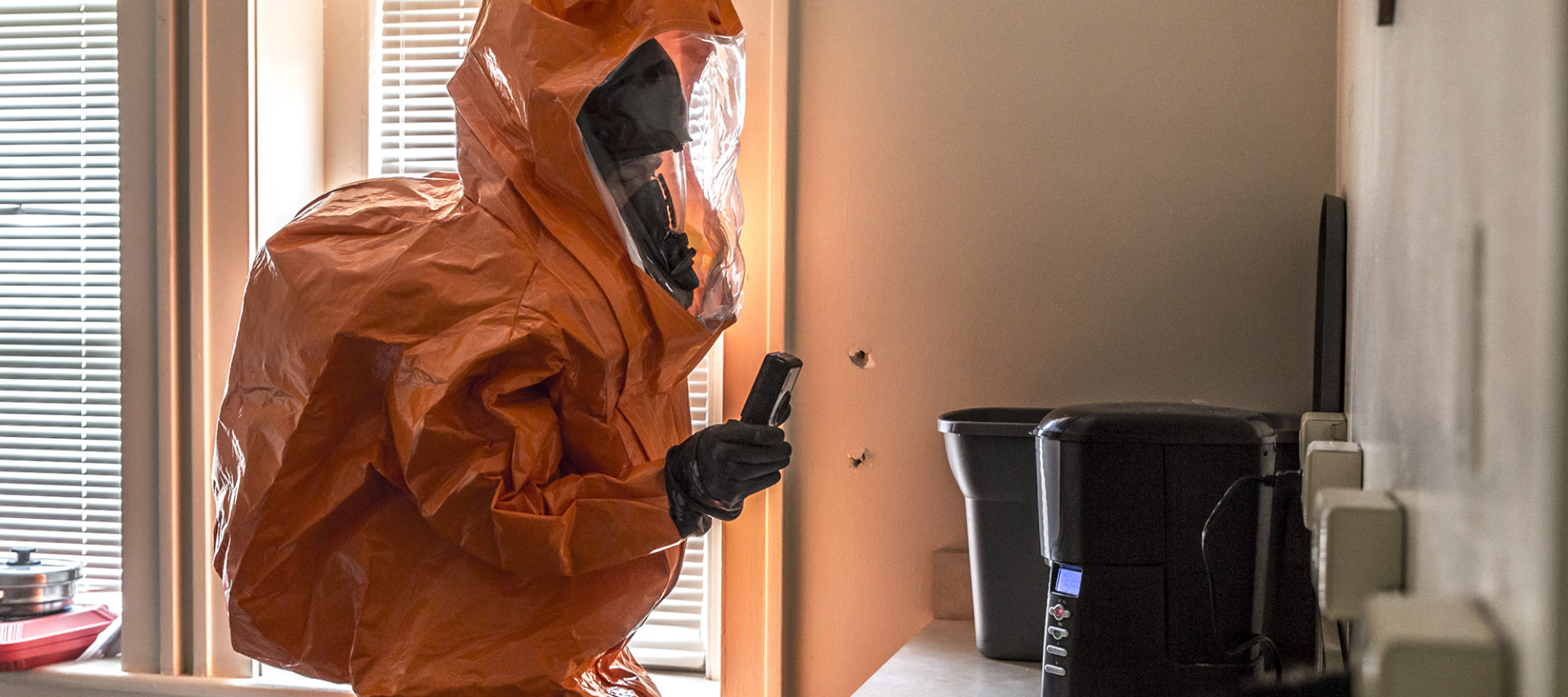

It is also important to note that developing and producing an effective biological weapon involves far more than simply acquiring a virulent pathogen, whether by isolating it from nature or synthesizing it from scratch. Tacit knowledge also plays an important role in the “weaponization” of an infectious agent, which includes the following steps: (1) growing the agent in the needed quantity, (2) formulating the agent with chemical additives to enhance its stability and shelf life, (3) processing the agent into a concentrated slurry or a dry powder, and (4) devising a delivery system that can disseminate the agent as a fine-particle aerosol that infects through the lungs. According to Kuhn, “The methods to stabilize, coat, store, and disperse a biological agent are highly complicated, known only to a few people, and rarely published.” Thus, even if terrorists were to synthesize a viral agent successfully, “they will in all likelihood get stuck during the weaponization process.”

The debate over de-skilling has focused not only on whole-genome synthesis but also on the related but broader field known as “synthetic biology.” Despite the overlap between these two disciplines, there are important differences. Whereas synthetic genomics is an “enabling” technology that makes possible many other technological applications, synthetic biology is an umbrella term that covers several distinct research programs. Two prominent and outspoken scientists, Thomas Knight of M.I.T. and Drew Endy of Stanford, advocate a particular synthetic-biology paradigm that aims to facilitate biological engineering through the development of a “tool kit” called the Registry of Standard Biological Parts. These parts, also known as “BioBricks,” are pieces of DNA with known protein-coding or regulatory functions that behave in a predictable manner and have a standard interface. In principle, such parts can be joined together to create functional genetic “circuits,” much as transistors, capacitors, and resistors are assembled into electronic devices. A major goal of parts-based synthetic biology is to design and build genetic modules that will endow microbes with useful functions not found in nature, such as the ability to produce biofuels or pharmaceuticals.

At least in theory, the use of standard genetic parts and modular design techniques should significantly reduce the need for tacit knowledge in the construction of synthetic organisms. As Gautam Mukunda, Kenneth A. Oye, and Scott C. Mohr of M.I.T. and Boston University have argued, “De-skilling and modularity … have the potential to … decrease the skill gradient separating elite practitioners from non-experts.”[21] Nevertheless, not everyone in the synthetic biology community has bought into the standardized-parts approach, and some believe that it is destined to fail — or, at the very least, not to live up to its ambitious claim of providing a simple and predictable way to design and build artificial genomes. One problem is that many biological parts have not been adequately characterized, so their activity varies depending on cell type or laboratory conditions, and some parts do not function optimally, or at all, because they are incompatible with the biochemical machinery of the host cell.[22]

In other cases, the characteristics of individual biological parts may be well understood, but the parts do not behave as expected when combined as an intended functional module. Indeed, even fairly simple genetic circuits tend to be “noisy,” operating stochastically rather than predictably. Furthermore, as the size of synthetic biological constructs increases, nonlinear interactions among the genetic and epigenetic elements may become increasingly difficult to predict or control, resulting in unexpected behaviors and other emergent properties. It is therefore conceivable that large genetic constructs could pose safety hazards that are impossible to predict in advance. (This possibility was discussed in these pages by Raymond Zilinskas and the author; see “The Promise and Perils of Synthetic Biology,” Spring 2006.[23]) In sum, although certain aspects of parts-based synthetic biology may well become more accessible to non-experts, the field’s explicit de-skilling agenda is far from becoming an operational reality.

Another element in the agenda of parts-based synthetic biology, as conceived by Knight and Endy, is to make the Registry of Standard Biological Parts freely available to interested researchers without patents or other restrictions. Over 130 academic labs now participate in the Registry community. An important vehicle for this “open-access biology” movement is the International Genetically Engineered Machine competition (iGEM), held annually at M.I.T. by the BioBricks Foundation.[24] The goals of iGEM are “to enable the systematic engineering of biology, to promote the open and transparent development of tools for engineering biology, and to help construct a society that can productively apply biological technology.”[25] Starting in 2003 with a small group of student teams from American universities, iGEM has since become a global event: in 2010, 118 teams from 26 countries participated.[26] Nevertheless, many of the teams have had trouble creating or using biological parts that work reliably and predictably in different contexts.

In May 2008, a group of amateur biologists in Cambridge, Massachusetts, launched another open-access initiative called DIYbio (“do-it-yourself biology”) with the goal of making biotechnology more accessible to non-experts, including the potential use of synthetic-biology techniques to carry out personal projects.[27] DIYbio has since expanded to other U.S. cities as well as internationally, with local chapters in Bangalore, London, Madrid, and Singapore.[28] Although the group’s technical infrastructure and capabilities are still rudimentary, they may become more sophisticated as gene-synthesis technology matures.

Some observers contend that the de-skilling and open-access agendas being promoted by iGEM and DIYbio will unleash a wave of innovation as a growing number of people from different walks of life acquire the ability to engineer biology for useful purposes. According to a team of social scientists affiliated with the Synthetic Biology Engineering Research Center (SynBERC) at the University of California, Berkeley, “The good news is that open access biology, to the extent that it works, may help actualize the long-promised biotechnical future: growth of green industry, production of cheaper drugs, development of new biofuels and the like.”[29] Extrapolating from these trends a few decades into the future, the physicist Freeman Dyson published a controversial article in 2007 envisioning a world in which synthetic biology has been de-skilled to the point that it is fully accessible to amateur scientists, hobbyists, and even children:

There will be do-it-yourself kits for gardeners who will use genetic engineering to breed new varieties of roses and orchids. Also kits for lovers of pigeons and parrots and lizards and snakes to breed new varieties of pets. Breeders of dogs and cats will have their kits too…. Few of the new creations will be masterpieces, but a great many will bring joy to their creators and variety to our fauna and flora. The final step in the domestication of biotechnology will be biotech games, designed like computer games for children down to kindergarten age but played with real eggs and seeds rather than with images on a screen. Playing such games, kids will acquire an intimate feeling for the organisms that they are growing. The winner could be the kid whose seed grows the prickliest cactus, or the kid whose egg hatches the cutest dinosaur.[30]

Whether such rosy predictions come true will depend on, among other things, the degree to which synthetic biology is de-skilled in the future. Historically, predictions of de-skilling have been made repeatedly but have often failed to materialize. For example, Helen Anne Curry, a graduate student in the history of science at Yale, has studied the development of plant-breeding techniques from 1925 to 1955. She found that during this period, agricultural interests promised that the use of radium, x-rays, and chemicals to generate genetic mutations would facilitate the creation of new and useful plant varieties, and that these methods would soon become available to amateur gardeners. But in fact, although the breeding techniques did result in novel varieties of roses and orchids, the predictions about de-skilling never came to pass.[31]

In addition to the potential benefits of de-skilling and open access, a number of commentators have warned that the democratization of synthetic biology could give rise to new safety and security risks. One concern is that substantially expanding the pool of individuals with access to synthetic-biology techniques would inevitably increase the likelihood of accidents, creating unprecedented hazards for the environment and public health.[32] Even Dyson’s generally upbeat article acknowledges that the recreational use of synthetic biology “will be messy and possibly dangerous” and that “rules and regulations will be needed to make sure that our kids do not endanger themselves and others.”

Beyond the possible safety risks, Mukunda, Oye, and Mohr warn that the de-skilling of synthetic biology would make this powerful technology accessible to individuals and groups who would use it deliberately to cause harm. “Synthetic biology,” they write, “includes, as a principal part of its agenda, a sustained, well-funded assault on the necessity of tacit knowledge in bioengineering and thus on one of the most important current barriers to the production of biological weapons.”[33] Drawing on the precedent of “black-hatted” computer hackers, who create software viruses, worms, and other malware for criminal purposes, for espionage, or simply to demonstrate their technical prowess, some have predicted the emergence of “bio-hackers” who engage in reckless or malicious experiments with synthetic organisms in basement laboratories.[34] Such nightmare scenarios are probably exaggerated, however, because the effective use of synthetic biology techniques relies on socio-technical resources that are not generally available to hobbyists. According to Andrew Ellington, a biochemistry professor at the University of Texas, “There is no ‘Radio Shack’ for DNA parts, and even if there were, the infrastructure required to manipulate those parts is non-trivial for all but the richest amateur scientist.”[35]

Indeed, when assessing the risk of misuse, it is important to distinguish among potential actors that differ greatly in financial assets and technical capabilities — from states with advanced bio-warfare programs, to terrorist organizations of varying size and sophistication, to individuals motivated by ideology or personal grievance. The study of past state-level bio-warfare programs, such as those of the Soviet Union and Iraq, has also shown that the acquisition of biological weapons requires an interdisciplinary team of scientists and engineers who have expertise and tacit knowledge in fields such as microbiology, aerobiology, formulation, and delivery.[36] States are generally more capable of organizing and sustaining such teams than are non-state actors.

Conceivably, the obstacles posed by the need for personal and communal tacit knowledge might diminish if a terrorist group managed to recruit a team of scientists with the required types of expertise, and either bribed or coerced them into developing biological weapons. But Vogel and Ben Ouagrham-Gormley counter this argument by noting that even in the unlikely event that terrorists could recruit such a scientific A-team, its members would still face the challenge of adapting the technology to a local context.[37] Dysfunctional group dynamics, such as a refusal by some team members to work together, would also create obstacles to interdisciplinary collaboration in areas requiring communal tacit knowledge.

Taking such factors into account, Michael Levi of the Council on Foreign Relations has questioned the ability of terrorists to construct an improvised nuclear device from stolen fissile materials. He notes that the process of building a functional weapon would involve a complex series of technical steps, all of which the terrorists would have to perform correctly in order to succeed.[38] The same is true of assessing bioterrorism risk: one must examine not only the likelihood of various enabling conditions, but also the probability that all of the steps in the weapon development process will be carried out successfully.
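The compounding logic behind this argument can be illustrated with a simple worked calculation. It rests on two assumptions that are ours, not Levi's: the development process is treated as a chain of n steps, each succeeding independently with probability p_i, and the numbers below are purely hypothetical rather than estimates from any source. Under those assumptions, the probability that every step succeeds is the product of the per-step probabilities:

```latex
% Illustrative only: assumes n independent steps, each succeeding with probability p_i.
P_{\text{success}} = \prod_{i=1}^{n} p_i
\qquad \text{e.g. } n = 5,\; p_i = 0.8 \text{ for all } i:\quad
P_{\text{success}} = 0.8^{5} \approx 0.33
```

Even when each step is individually likely to succeed, the chance of completing the whole chain falls quickly as steps are added. Real failures are unlikely to be fully independent, so this is a simplification of the point rather than a risk model.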

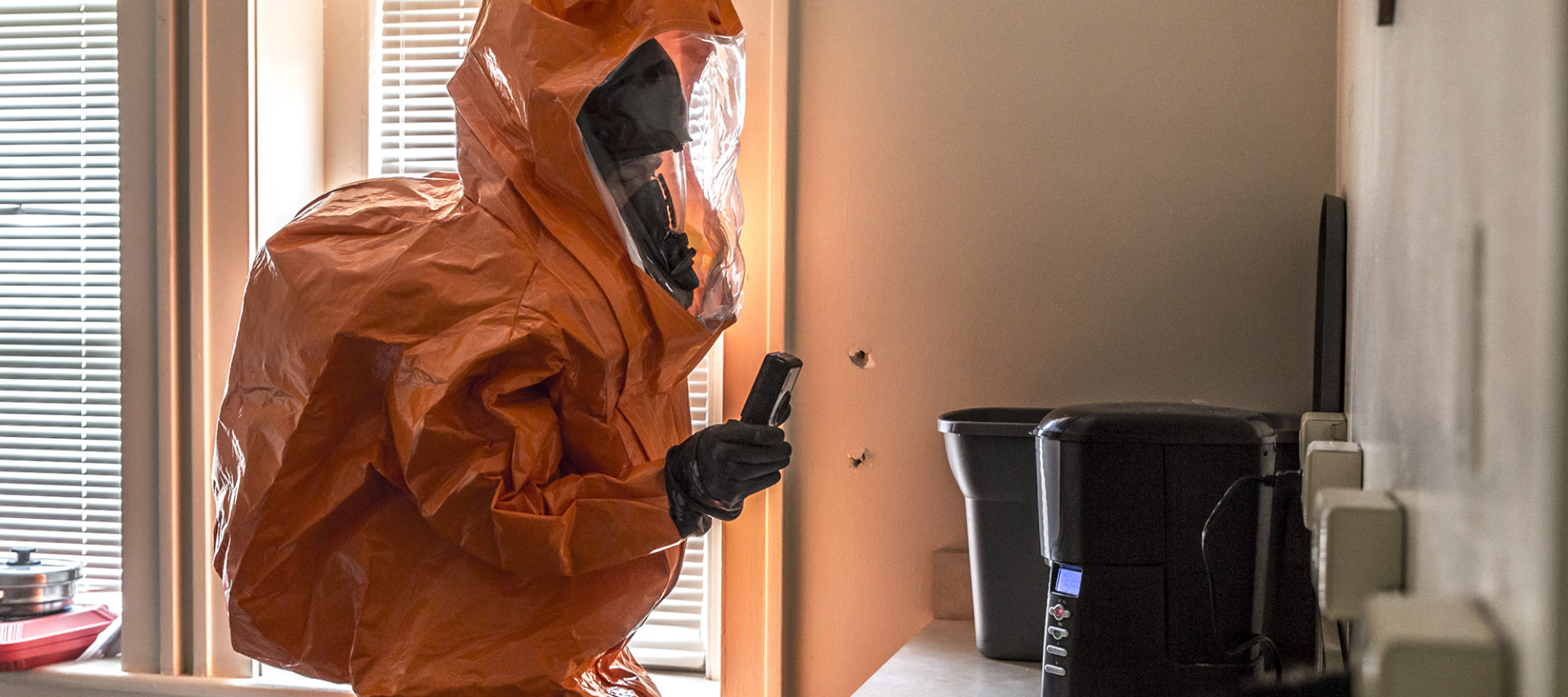

Finally, problem-solving is crucial to the mastery of any complex technology. Biotechnologists must be creative and persistent to overcome the technical difficulties that inevitably arise during the development of a new process. Thus, a key variable affecting the risk that terrorists could exploit synthetic biology for harmful purposes would be their ability to perform multiple iterations of a technique until they get it right, a requirement that presupposes a stable working environment and ample time for experimentation. Such amenities would probably be lacking, however, for individuals working in a covert hideaway or conducting illicit activities (such as the synthesis and weaponization of a deadly virus) in an otherwise legitimate laboratory.

Whether commercial kits and automation will merely help experienced scientists perform certain difficult or tedious operations more quickly and easily, or whether de-skilling will truly make advanced biotechnologies available to non-experts, particularly those with malicious intent, is still an open question and will probably remain so for some time. To resolve the debate over the extent to which terrorists could misuse synthetic biology to cause harm, it is important to determine whether de-skilling affects those aspects of the technology that currently require personal or communal tacit knowledge.

Preliminary evidence suggests that de-skilling does not proceed in a uniform manner but affects some biotechnologies more than others. A number of techniques have proven resistant to de-skilling for the reasons mentioned, including the complexity of biological organisms and the critical role of tacit knowledge and other socio-technical factors. Moreover, although scientists commonly use genetic-engineering “kits” containing all of the materials and reagents required for a particular laboratory procedure, these kits do not necessarily remove the need for tacit knowledge when applied in the context of a particular experiment.[39]

Instead of making assertions based on anecdotal evidence about whether or not synthetic biology will become de-skilled and accessible to non-experts, it would be more useful to conduct empirical research on the nature of tacit knowledge and the process of de-skilling. Shedding new light on the debate will require addressing several questions about the role of tacit knowledge and other socio-technical factors in biotechnological development: First, what are the specific conditions, skills, and socio-organizational contexts that are required for advanced biotechnologies to work reliably? Second, why do certain tools, techniques, and practices of biotechnology become de-skilled, while others do not? Third, what are the conditions, both technical and social, that facilitate or hamper the process of de-skilling?

Possible methodological approaches for answering these questions include the analysis of past efforts to transfer complex technologies from one laboratory setting to another, in-depth interviews with practicing scientists about the role of tacit knowledge and other socio-technical factors in their research, and the close ethnographic observation of laboratory work. Such studies should permit a more nuanced assessment of the safety and security risks associated with synthetic biology and other emerging biotechnologies, and will help policymakers determine which areas warrant oversight or regulation to prevent deliberate misuse.

Exhausted by science and tech debates that go nowhere?