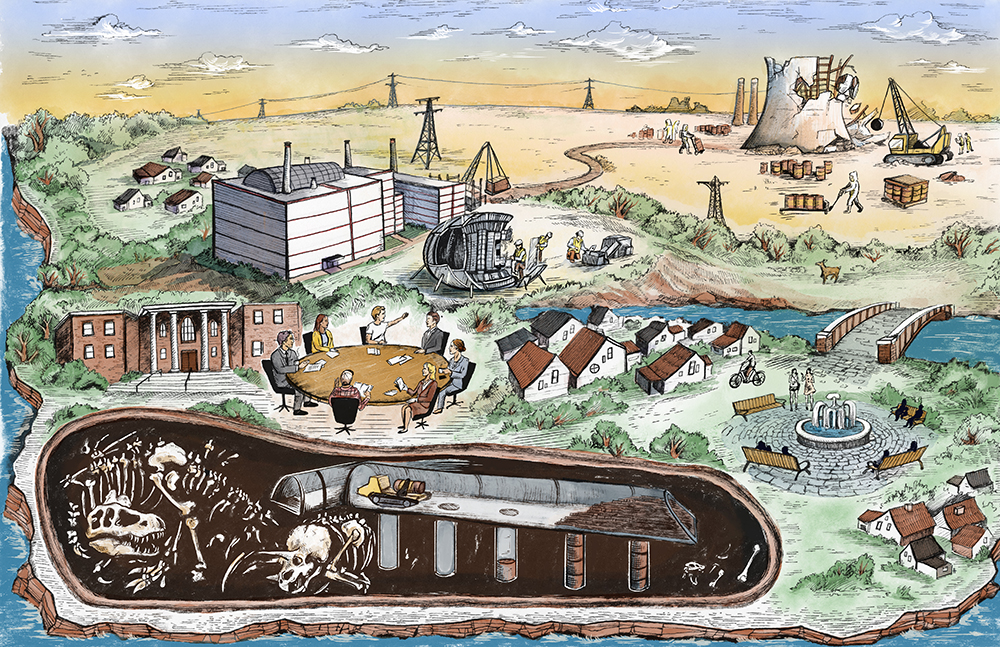

America’s nuclear energy situation is a microcosm of the nation’s broader political dysfunction. We are at an impasse, and the debate around nuclear energy is highly polarized, even contemptuous. This political deadlock ensures that a widely disliked status quo carries on unabated. Depending on one’s politics, Americans are either left with outdated reactors and an unrealized potential for a high-energy but climate-friendly society, or stuck taking care of ticking time bombs that churn out another two thousand tons of unmanageable radioactive waste every year.

The consequences of continued stalemate are significant. U.S. nuclear electricity generation has not increased in thirty years, and the average plant is almost forty years old. The deadlines the Intergovernmental Panel on Climate Change has set for acting to avoid the worst climate disruptions from carbon dioxide emissions loom ever closer. At the same time, many researchers maintain that most nuclear plants are still far from safe, and that repeats of disasters like Fukushima or Chernobyl are a matter not of if but of when.

Why can’t we resolve this debate? Why have we been having the same basic argument for fifty years, without any fundamental redesigns to the technology since the 1970s to show for it?

Advocates claim that the fault lies with opponents who refuse to listen to the science: “Democrats’ irrational fear of nuclear power has contributed to the destruction of the environment,” wrote The New Republic’s Eric Armstrong in 2017. Psychologist Steven Pinker has been particularly outspoken, recently claiming that “unfounded fears” explain the public’s nuclear hesitance. And Mark Lynas has portrayed nuclear opposition as rooted in “conspiratorial thinking.”

Environmental activist and “ecomodernist” Michael Shellenberger, perhaps the most prominent American advocate today of nuclear power, offers dizzying arrays of factoids and charts, and has gone so far as to label skeptics “enemies of the earth” who are waging a war on prosperity.* The website for his organization, Environmental Progress, bombards readers with facts that are disconnected, out of context, poorly explained, and of questionable relevance. A chart noting that nuclear plants require less land area than wind farms stands next to another boasting that all of America’s nuclear waste could be stacked fifty feet high on a football field, and another bemoaning America’s abysmal energy storage capacity.

Shellenberger has characterized South Korea’s decision to restart construction of two nuclear reactors as “choosing wisdom over ideology.” And he has argued against developing new designs, because “the more radical the innovation, the higher the cost.” But opposing new designs also means that he opposes safer designs, on the grounds that creating them would be too high a barrier to rapidly scaling nuclear power. He pairs this rosy take about nuclear power with pessimism about renewables, which he dismisses as useful only if our goal is to return to agrarian, pre-modern life.

Is nuclear skepticism really anti-science? Has the time come for opponents to get over themselves and toe the scientific line?

This is too simple a way to think about the challenges posed by nuclear power. Nuclear physicist Alvin Weinberg, writing in 1972, found so many difficulties with estimating the health effects of radiation and the safety of nuclear power that he coined a new term, trans-science, to distinguish it from areas where prediction and clear answers are possible. How is it that Michael Shellenberger and Steven Pinker are able to cut through the messy complexities of nuclear power to uncover the pure, evidential truth?

The answer is that they have not actually been able to. The story about nuclear power offered by its most vocal advocates is not scientific but scientistic. It is a story about technocrats shoving aside the public, cherry-picking studies with the most favorable assumptions built in, and declaring as “safe” a reactor design that inherently tends toward catastrophe. The language of Shellenberger and Pinker echoes the foolhardy enthusiasm of the atomic age, when advocates dismissed skeptics of even the most fanciful projects — like nuclear-powered jet planes — as lacking sufficient vision. We submit to this rhetoric at our peril.

Yet it is also premature to abandon nuclear power wholesale. How then can citizens and policymakers move past the stalemate to develop nuclear technologies that benefit more people more of the time? By examining the technocratic roots of the current technology and how scientism holds us back, we outline a path to bring democracy back to the debate, allowing societies to realize the best that nuclear energy has to offer.

If we cannot make headway on nuclear power — and do so democratically — there would seem to be little hope for similarly complex challenges: climate change, artificial intelligence, collapsing biodiversity, sending humans to Mars. We must end the nuclear stalemate. Whether we can is a crucial test for democracy, and for humanity.

Writing in Forbes last year, Michael Shellenberger wants us to believe that the “best-available science” shows that “nuclear energy has always been inherently safe.” Our current nuclear reactor designs, he insists, are the safest and surest path to a carbon-neutral future. Climate scientist James Hansen, accusing fellow environmentalists of being unscientific, says, “Their minds are made up, facts don’t matter much.” On the other side, Greenpeace’s Jim Riccio describes the nuclear project as being already dead, as if it were a matter of settled science: “We’ve known for a decade that nuclear power plants are the worst route to go to solve climate change.”

But the matter, again, is not so simple. The relevant science is more complex and uncertain than described by either hardline advocates or critics. Cited “facts” often end up being dubious clichés, or based on studies whose assumptions all but predetermine their conclusions.

Let’s begin with the claim about carbon emissions. Andrew Sullivan repeats a common mantra when he writes in New York Magazine that nuclear power is a “non-carbon energy source.” That is true, sort of. Power plants do not emit carbon dioxide while they are running. But the construction of the plant itself emits carbon. So do the mining and refining of uranium, and those emissions might worsen considerably if nuclear power were to expand around the world, because that expansion would require mining ore with lower uranium concentrations. Under one scenario offered by the Oxford Research Group, a London think tank, in which nuclear power generation increases while ore quality decreases, the carbon output per kilowatt-hour of the nuclear power industry could by 2050 equal that of the natural gas power industry.

Such estimates are hardly unassailable. How reliable is our information about uranium reserves and mining technology? Which emissions should we count as being part of the technology? How do we project future reliance on nuclear energy? Estimates of emissions from nuclear power depend in large part on how one answers such questions, which is why these estimates, according to a 2014 paper, vary by a factor of a hundred. Another paper found that they vary by a factor of two hundred.

But there is even greater uncertainty from the many cataclysms and possible side effects that can arise from splitting the atom. Most estimates, reasonably enough, do not factor these in. For instance, they typically do not account for post-accident cleanup and protection, such as the mile-long, thirty-meter-deep ice wall that Japan uses to contain toxic water from Fukushima’s damaged reactors. They do not factor in the climate impact from a possible nuclear conflict made more likely by uranium enrichment and plutonium production. And the carbon footprint of long-term storage is still anyone’s guess; it will be considerably worse if future generations have to dig up and remediate storage sites that fail to remain inert for ten thousand years.

This kind of problem shows up across seemingly every aspect of estimating the safety of nuclear power. And yet, advocates are adamant. Sir David King, the U.K. government’s former top climate scientist, asked after the Fukushima accident: “Is there safer power than nuclear energy historically? No.”

But what does it even mean to say that a technology is safe? Consider Shellenberger’s claim that most of Fukushima is less radioactive than Colorado, which has high levels of uranium in the ground but where residents have low rates of cancer compared to other states. This claim is profoundly simplistic, as it ignores the mode of contact. There are three main types of nuclear radiation: alpha, beta, and gamma. Alpha particles and most beta particles cannot penetrate deeper than the skin, but they are exceptionally dangerous when inhaled or ingested. Gamma rays, by contrast, pass through the body and can damage it directly from outside.

The Japanese have to deal primarily with cesium contamination, which emits gamma and beta radiation. Present in the soil and water, cesium has numerous pathways for ingestion: drinking water, absorption into rice produced in the region, and ingestion by cows or other animals that are then eaten. Such contamination can also bioaccumulate in unexpected ways, something that caught British scientists off guard in the aftermath of the Chernobyl disaster.

By contrast, the main hazard posed by Colorado’s naturally occurring ground uranium is its decay product radon, which emits alpha particles. A gas that escapes from the soil and can contribute to lung cancer through inhalation, radon can be effectively mitigated indoors through ventilation systems. Moreover, the clearest known hazard of background radiation is thyroid cancer, whereas a state’s overall incidence of cancer is typically dominated by breast, lung, and prostate cancer, and is highly driven by its rate of smoking (which is relatively low in Colorado). The connection between background radiation and total cancer rates is thus tenuous, and sweeping comparisons between them are deceptive.

Or take another of Shellenberger’s claims, that “radiation from Chernobyl will kill, at most, 200 people.” He arrives at this extremely low number by drawing on a 2008 U.N. report and adding up how many staff and first responders died at the time of the accident; how many more of them have since died of potentially related causes; and a projection of how many people in the affected area will die of radiation-induced thyroid cancer within eighty years of the accident (apparently using an optimistic reading of the disease’s five-year survival rate in the United States).

Shellenberger evidently assumes that only directly attributable deaths should count, resulting in the lowest possible estimate. But there are reasonable arguments for assuming otherwise.

For instance, nuclear weapons expert Lisbeth Gronlund, writing for the Union of Concerned Scientists, argues that the problem with many low Chernobyl estimates is that they look only at the population suffering the greatest exposure, not at the whole population affected by radiation — which includes much of the northern hemisphere and all of Europe. With a large population, even a tiny increase in cancer rates can mean thousands more deaths. Using global contamination rates from a different part of the same U.N. report underlying Shellenberger’s numbers, Gronlund calculates an excess of at least 27,000 cancer deaths.

Other analyses reveal how entangled these estimates are with politics. The highest estimate of Chernobyl deaths, 985,000, comes from a 2007 Russian publication. The authors have a clear bone to pick with former Soviet officials, claiming that “problems complicating a full assessment of the effects from Chernobyl included official secrecy and falsification of medical records by the USSR.” Having framed official records as untrustworthy, the authors present their own data as more reliable. They assume that the death of every member of the Chernobyl cleanup crews (some 125,000 deaths at the time of the report) was the result of the accident, and that the difference between mortality rates among cleanup crews and the rest of the affected area can be extrapolated to distant communities.

Shellenberger and the Russian authors are both extreme examples, but it is notable that their estimates vary by a factor of five thousand. A more common mid-range estimate of four thousand deaths comes from a report by the Chernobyl Forum, composed of several U.N. and other agencies. But this estimate also looks only at the people living in the most contaminated area. There is no reason to believe that a seemingly “moderate” mid-range estimate is any less value-laden than an extreme one.

We find the same divergence in estimates about nearly every other key factor of nuclear power. Cost is another instructive example. Advocates will often tout how cheap it is to produce nuclear power, while neglecting the enormous expense of building the plants in the first place. But is the high cost of the plants a product of the technology itself, or merely of the American regulatory process? When comparing nuclear to renewables, do we factor in the extra expense renewables require for upgrading the power grid and building backup plants and energy storage to compensate for their intermittency? Exactly how much does ten thousand years of nuclear waste stewardship cost?

Different assumptions result in dramatically different estimates, but every estimate must make some such assumptions. These will be based on values, preferences, and biases that may each be rational and defensible. Underestimating deaths, say, may be better if we prefer to include only figures of which we are absolutely confident; overestimating may be better if we prefer to err on the side of caution. Either way, no study can hope to be value-free. We will likely never be able to know as definitively as some claim how many people died from the Chernobyl disaster, or the true costs of nuclear power.

Decisions about how to factor in all these possibilities and uncertainties can never be entirely objective. This is the predicament that Alvin Weinberg recognized fifty years ago. When it comes to questions about the dangers of chronic low-level radiation, the risks of rare catastrophes, or what energy source will best serve humanity in the future, science alone cannot make these decisions, and the claim that science does will not make it so. These matters are trans-scientific.

The problem is not that the science has not been done yet, but rather that it may be undoable. Some questions may simply exceed the reach of our methods. For other issues, we may lack the resources or wherewithal to adequately answer them, or we may discover that any solution is inextricably intertwined with our values. Weinberg warned that scientists had “no monopoly on wisdom where … trans-science is involved.” The best they can offer is the injection of additional intellectual rigor into the debate and a delineation of where science ends and trans-science begins.

In a morass of uncertainty, it can be comforting to believe that one’s chosen position is supported by the true facts. Rather than nuclear politics being beset by too little science, we actually suffer from what Daniel Sarewitz has called an “excess of objectivity.” Given the high stakes, the research is invariably politicized, and any study will face such scrutiny that it is almost guaranteed not to settle the matter. The relevant sciences not only fail to settle the political question; they actually preclude an answer.

None of this is to say that science is unhelpful here. Even imperfect estimates are better guides than none at all. Rather, the problem lies with the way “the facts” are used, and science is weaponized, in political debate.

In the claim that nuclear power is “inherently safe,” much of the trouble is with the word inherent. Even disregarding the wildly divergent estimates of exactly how safe nuclear power is, the attempt to measure “inherent safety” at all suggests that it is some discernible internal property of the technology — like the theoretical efficiency of a particular steam engine design.

But in much the same way that the facts about nuclear accident deaths depend upon non-scientific assumptions, nuclear safety is bound up in contingencies of social organization, culture, personality, and geography.

Take, for instance, Michael Shellenberger’s point that the death toll from Chernobyl was lower than that from the 1975 Banqiao dam disaster in China. Chernobyl was in a relatively sparsely populated area, while the Banqiao dam sat above a massive population center. So rather than demonstrate the relative safety of nuclear compared to hydroelectric, this example only demonstrates that power plants in general are safer when located far from large populations.

Moreover, the light-water reactor (LWR) technology that powers most nuclear plants faces the ever-present risk of a runaway meltdown, demanding immense levels of vigilance, communication, and care. As one article, appropriately titled “Operating as Experimenting,” puts it, “plant managers acknowledge that ‘Something unexpected is always happening’ — pumps fail, software crashes, pipes break.” The light-water reactor is a complex technology: Its systems are highly interrelated, prone to regular errors, and can have unexpected synergistic relationships. All of this means that failures can quickly cascade into serious disasters.

This built-in instability of light-water reactors demands a great deal from the people and organizations in charge of them. No single engineer knows or can know exactly how all the systems in the plant work, and operators who maintain the safety of nuclear reactors must constantly try to test hypotheses as things go wrong.

At Three Mile Island in 1979, a series of events led to an accidental drop in the level of water that cools the reactor core, increasing the heat and thus the pressure in the cooling system. Among other issues, a valve that had opened automatically to release pressure never closed again. But operators mistook the resulting loss of coolant for a leak they had already identified earlier. Two hours into the accident, a partial meltdown and a leak of radioactive water had become all but inevitable.

Creeping complacency and production pressures can also lead operators and managers to overlook or downplay what appear to be minor abnormalities. As one paper explains, even during an “alarm avalanche” (an overwhelming series of alarms in a short period of time), operators sometimes simply ignore warning signals, partly because they know that analyzing them may not offer much benefit, and partly because they don’t have time to do so as they try to respond. Keeping up is made more difficult because light-water reactors cannot simply be switched off: even after the chain reaction stops, the fuel keeps generating decay heat, so operators must maintain constant coolant flow to prevent a meltdown even when the plant is shut down.

Because of the complexity and large scale of LWRs, operating them is more like managing an experiment than building a bridge. Keeping a reactor from melting down, or trying to prevent the spread of radiation after an accident, is akin to rope-a-dope, the tactic in which a boxer hangs on the ropes, hoping to tire out the opponent. It requires attentiveness and agility to duck or block every punch, and a single poorly timed error in judgment is all it takes to get knocked out. But for LWR plant operators, the opponent never tires. Instead, as the reactor ages and becomes less stable, the punches it throws become harder and more frequent.

A 2016 Union of Concerned Scientists report found that U.S. plants the previous year experienced ten “near misses”: “problems that may increase the chance of a reactor core meltdown by a factor of 10 or more.” That none of them became a meltdown is a good record, but even a record this good is not guaranteed to last. How much of our relative success in playing nuclear rope-a-dope has been due to ability and how much to sheer luck is impossible to know.

The limited harm from nuclear disasters so far owes much less to the safety of light-water reactors themselves than to the willingness of policymakers and managers to constantly expend resources to keep them safe, to spend whatever it takes to play rope-a-dope indefinitely. As an article in Wired put it, “The nuclear industry is safe because every plant consumes billions of dollars in permitting, inspections, materials, and specialized construction decades before producing its first jolt of current.” By the same token, running the plants safely and containing radiation in the event of an accident has more to do with management and politics than with the technology.

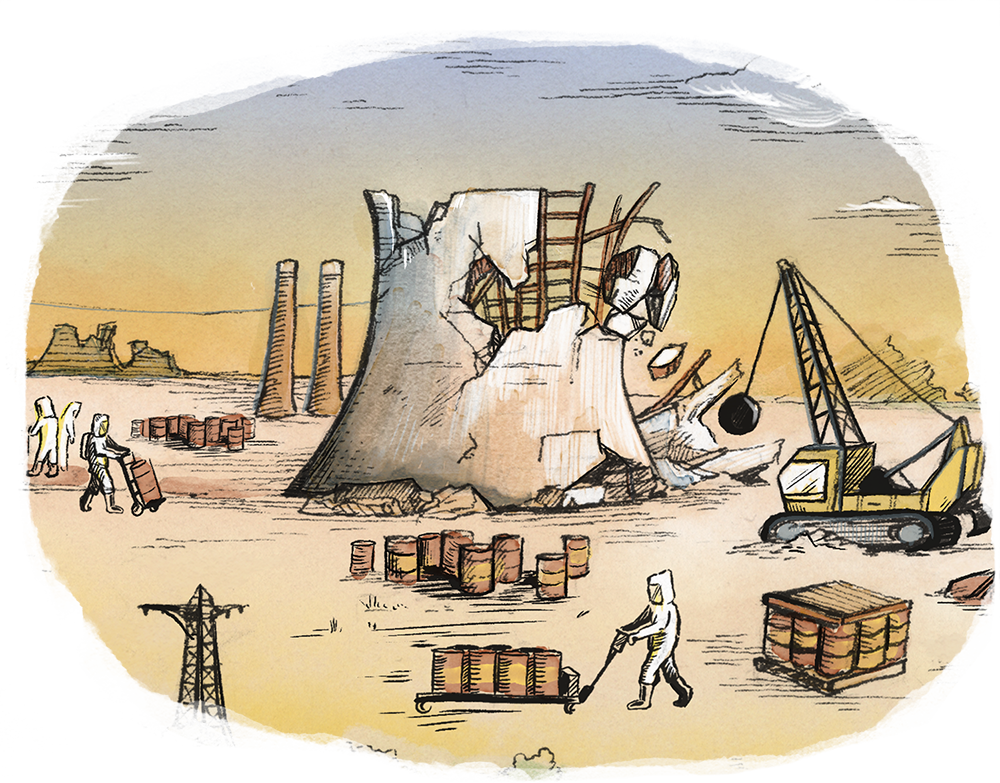

Radioactive water from the 2011 Fukushima disaster, caused by a tsunami, was eventually contained with a massive ice wall, built around the damaged plant at a cost of $324 million. Overall, ensuring that the harms are minimized has cost Japanese taxpayers $188 billion. The plant’s manager has been praised for his remarkable response in the immediate aftermath. After the initial evacuation, only about fifty essential workers returned to stabilize the reactors.

So far as we know, Fukushima’s human toll has been small: As of 2018, radiation had caused cancer in perhaps a handful of workers, one of whom had died. But these low numbers may be deceiving. Subcontractors enlisted armies of homeless men to clean and contain the radiation at less than minimum wage. Their employment was off the record, and any health effects on them have not been tracked.

Individual plant managers and the nuclear industry as a whole have realized substantial safety gains, and ongoing learning from major incidents has led to a gradual drop in the accident rate. But assuring safety for an unpredictable technology is incredibly expensive. The Diablo Canyon nuclear plant in California has been touted as a noteworthy case of a “high-reliability organization,” where elevated levels of communication and a vigilant safety culture consistently avoid disaster in a high-risk environment. But the plant takes over seven times as many employees and outside consultants to run as a fossil-fuel station with a similar power output.

Even for high-reliability organizations, future safety is not guaranteed by past success. As one paper put it, these kinds of institutions are “unlikely, demanding and at risk.” Organizations are often unwilling to commit the necessary funds or risk production slowdowns. And rather than securing permanent gains, past successes may well breed complacency.

The question is not whether light-water reactors are safe, but whether the social and political organizations behind them can be.

All around us today there is handwringing about the emergence of a “post-truth” era. Scientific facts, one hears, should determine politics. We call this idea political scientism. It seems to drive every side of contemporary political debates, not just about nuclear energy.

In the debate over anthropogenic climate change, skeptics talk just like believers: they claim that their own experts, such as Judith Curry or John Christy, are uniquely objective in their interpretation of atmospheric science data. To them, the believers are ideologically driven alarmists. Likewise, to advocates of “common sense” gun control, it seems indisputable that limiting magazine capacity or enacting bans like those Australia adopted after the 1996 Port Arthur massacre will end America’s epidemic of gun homicides. To opponents, the apparent failure of gun restrictions in Chicago and Washington, D.C. has already proven these policies worthless. The debates over lockdowns and masks during the Covid-19 pandemic provide further sad, ongoing examples.

Despite these being immensely complex problems, near-absolute certainty is somehow not in short supply. When empirical knowledge is seen as determinative of politics, the outcomes of any policy change are seen as knowable in advance. Perhaps the challenge of contemporary politics is not that expertise has been shoved aside by emotion but that political discourse is beset by a simplistic view of what expertise can accomplish.

As Daniel Sarewitz has argued, the political process is gridlocked by this tendency to hide behind “science,” “facts,” and “expertise.” Politics is no longer recognized as a never-ending dynamic process of negotiation, compromise, realignment, and experiment among a diversity of competing interest groups, but instead is expected to be a straightforward implementation of unassailable Truth.

This view obscures the very values that really drive people’s political positions. And if politics is simply a matter of believing the facts, then those we disagree with come to be seen as too ignorant, brainwashed, or ideologically corrupted to be worthy of engagement. The result is that negotiation grinds to a halt.

If we truly lived in a “post-truth” era, we would not see nearly every partisan group strive so strenuously to depict itself as in better possession of the relevant facts than its opponents. We would not deride political enemies as too encumbered by emotional and ideological blinders to see what “science” tells us must be done.

This is where most diagnoses of America’s political morass get things wrong. The image that best represents our political era is not the ignorant rube with his fingers in his ears but polarized factions captivated by their own crystal balls.

This is precisely the situation we find in the debate over nuclear energy. The technology is viewed either as unalloyed progress or as a recipe for disaster. Partisans choose one reading from a morass of uncertain and conflicting data and call it “the facts.” Contrary interpretations are dismissed as ignorant, emotional, or ideological. The result is decades of gridlock.

The point is especially true of nuclear advocates, but not only of them. Skeptics, too, often ignore or underemphasize the underlying values, also treating the debate as if it were mostly a matter of lining up the right facts. At a 2016 town hall on climate change, Senator Bernie Sanders declared that “scientists tell us” carbon neutrality is achievable without nuclear energy, much in the same way that Senator Cory Booker, an advocate of next-generation reactors, insisted on dealing “with the facts and the data.” A researcher at the Union of Concerned Scientists, discussing the advantages of renewables over nuclear power, concludes, “before calling for a nuclear renaissance to address climate change, let’s take a closer look at the facts.”

The idea that there is an objectively correct policy option encourages a black-and-white worldview: The science is on my side; yours is nothing but sophistry and illusion. Political argument becomes about dividing the world into enlightened friends and ignorant heretics. Recall Michael Shellenberger’s label for nuclear opponents: “enemies of the earth.” This fanatic, scientistic discourse stands in the way of nuclear energy policy that is both intelligent and democratic.

If love or hate for nuclear power is not a matter of simply accepting the facts, then what does underlie it? As with most risky technologies, support is not mainly a matter of rationality but of trust, fairness, control, responsibility, and plain subjective aversion to catastrophe. Science writer Robert Pool, in his 1997 book Beyond Engineering, offered a helpful distinction between “builders” and “conservers.” While builders have immense faith in our capacity to technologically manipulate our environments for the greater good, conservers need considerably more evidence to be persuaded that people are capable of deploying a new technology responsibly.

The builder mentality, although probably more common among scientists and engineers, is not more scientific. It mostly reflects a difference in trust, which comes more easily to the people doing the building. Everyone else moves along the builder–conserver spectrum based on a myriad of factors, including recent events and their own experiences.

Public confidence in nuclear power perhaps began to erode when the performance of early generations of nuclear plants failed to match rosy predictions of energy “too cheap to meter,” as Atomic Energy Commission chairman Lewis Strauss had promised in 1954. Three Mile Island, Chernobyl, and Fukushima reasonably aggravated fears about safety. Statistics and other factual appeals in support of nuclear now fall on deaf ears, not because the public is anti-science but because the technology and its advocates are no longer trusted.

Many scholars of risk, such as Paul Slovic and Charles Perrow, have pointed out why people perceive the risks of nuclear power differently than they do other technologies. Ecomodernists are right that the deaths caused by coal power are an ongoing tragedy, but for many people, apples-to-apples comparisons of body counts don’t matter much when there is the dreadful potential for larger, long-lasting catastrophes. Whether one is more afraid of dying in a plane crash or another 9/11 attack than in a car accident or by gun homicide is not something anyone really decides via statistics. The qualitative stakes are simply different.

We can see as much in how nuclear energy and nuclear weapons have been intertwined from the start. And support for one often tracks with support for the other — with self-proclaimed enthusiasm for peacefully harnessing the power of the atom too often bleeding over into ghoulish dismissal of the horrors of meltdowns and mushroom clouds. Chemist Willard Libby, a key figure on the Atomic Energy Commission and an architect of both the nuclear energy industry and of nuclear testing in the 1950s, famously remarked of bomb testing in Nevada, “People have got to learn to live with the facts of life, and part of the facts of life are fallout.” Libby and other scientists at the time used “sunshine units” as a measure of the effect of radiation on the body.

Another reason many people dread nuclear energy is that they do not control it. It is similar to other complex, high-power, centralized technologies like air travel. For many, driving a car is not as frightening as getting into a plane, even if it is statistically far more dangerous, because the driver has more control over how much risk she takes. In air travel, the aircraft designers, FAA regulators, and pilots choose the risk; passengers are just along for the ride. A nuclear meltdown is like a plane in a nosedive, with nearby residents the hapless passengers — but with consequences potentially far more dire.

Because nuclear technology is something developed and deployed by distant technical experts and politicians, out of sight of average citizens, popular support relies on public faith. As a 2016 paper notes, the Japanese public’s support for nuclear energy dropped precipitously after Fukushima, while belief that “the government and electric utility companies do not report the reality about [nuclear power] safety” spiked. Similarly, undergirding people’s level of support for nuclear power is their overall belief in the capacity of humans to fully understand and reliably operate complex and risky technologies.

Then there is the issue of fairness. Just as producing energy from burning coal deprives some citizens of their right to clean air, shifting to more nuclear power imposes burdens on many people who do not benefit from it, or even consent to it. Future generations, most notably, cannot be asked if they agree that the responsibility of stewarding nuclear waste for thousands of years is worth the climate benefits — whatever those benefits actually end up proving to be. Although the Eisenhower generation believed that they were passing on a great inheritance by building our national infrastructure around suburbs, cars, and highways, today’s young adults who prefer urban or rural life are left with more limited choices. Entrenched technology is one of the means by which the dead end up ruling over the living.

Consider also the Navajo of New Mexico, Arizona, and Utah, many of whom suffer from a condition called Navajo Neuropathy associated with contamination from uranium mining. Or consider the Yakama near the decommissioned Hanford Site in Washington state, who now have to deal with waste leaking from decades of plutonium production for nuclear weapons. Should these communities be expected to keep bearing the costs of nuclear prosperity without having any say in potential alternatives?

Finally, and perhaps most significantly, beliefs about nuclear power are unavoidably a proxy for divergent moral commitments about economic growth, our relationship to the planet, and the life well lived — in short, for whether we should favor more or less industrialized societies. The rhetoric of political scientism obscures how differing standards of sufficient evidence are in part a product of deeper commitments to values like combating climate change or concern for those who work at uranium mines.

These varying concerns cannot be flattened into some unitary view of “the facts,” and choosing how one weights them, or whether or not to admit them at all, is not a matter of whether one believes in science, progress, or truth. When hardline nuclear advocates ignore or dismiss them, they are making a value choice no less than are skeptics.

We have been here before. In 1964, a vice president of the Westinghouse Electric Corporation, John Simpson, advocated countering nuclear skepticism with broad education programs, contending that “the only weapon needed is truth — well-presented and widely disseminated.”

Today’s ecomodernist visions of a future of high energy, high consumption, and zero carbon echo 1950s visions of an atomically powered utopia. As today, those dreams were spurred by a race against time, though the pressure came not from a warming planet but from a looming Soviet threat, along with the desire to demonstrate peaceful uses of nuclear technology and the ambitions of General Electric and Westinghouse to gain a dominant market share.

Although the kinds of pressure and the visions of the future have changed, the risks of plunging ahead with a unitary view of value and truth remain similar.

Despite Simpson’s assurances that embracing nuclear power was a matter of simple truth, the reactor design that has dominated what Americans think of as nuclear power was chosen not because it was theoretically best, or because it had the greatest democratic buy-in, but because of contingent political and economic factors of the immediate postwar era. These factors may seem to have little relevance to today’s debates, yet our debate, like the reactor design itself, is locked in by this legacy.

Understanding this history is crucial to recognizing the peril of scientistic discourse, and of what happens when we fail to respect the complexity of emerging technologies. It is a history of political failure — but one that provides lessons for how we might do better now.

Judging by the way mainstream discourse today speaks of “nuclear energy,” it is easy to miss that it is not a single technology but rather dozens of potentially feasible designs. The heat that powers a nuclear reactor can come from several fuels: natural uranium, enriched uranium, plutonium, or thorium (which must first be converted in the reactor into fissile uranium-233). Possible coolants are numerous: Apart from ordinary water, one could also use borated water, molten sodium, various liquid salts, helium, or certain hydrocarbons. Adding further complexity to the design is the choice of moderator, the substance that slows down the neutrons released by fission so that they are more likely to trigger further fissions, such as heavy water or graphite. In some designs the coolant doubles as the moderator.

Many of these options were on the table in the United States prior to the 1950s. The Atomic Energy Commission (AEC) originally directed research into several types of reactors. Some used natural rather than enriched uranium, which could have disentangled civilian power generation from nuclear weapons. Another design type, the fast-neutron reactor, consumes a larger share of its fuel, leaving less waste behind.

So how is it that a class of technologies with so many possible options was effectively winnowed down to one, seemingly inferior, design — the light-water reactor?

The story is told by Robert Pool in Beyond Engineering: How Society Shapes Technology (1997), by Joseph G. Morone and Edward J. Woodhouse in The Demise of Nuclear Energy: Lessons for Democratic Control of Technology (1989), and by Robin Cowan in “Nuclear Power Reactors: A Study in Technological Lock-in” (1990). In the United States, the development of nuclear power for civilian use was closely tied to its use in the military. After World War II, the Navy prioritized the development of nuclear-powered submarines to gain an advantage over the Soviets. And once the Soviets detonated a nuclear bomb in 1949, the U.S. Navy’s development of nuclear power became even more pressing.

The Atomic Energy Commission initially did not make a nuclear navy a high priority. But the Navy, convinced of the strategic importance of nuclear-powered submarines, appointed Captain Hyman Rickover as its liaison to the AEC. Rickover was seen as “hard-headed, even ruthless,” and likely to get the Navy its way. He prevailed.

Soon, Rickover was the head both of the Naval Reactors Branch of the Bureau of Ships and of the AEC’s Division of Reactor Development. These dual positions gave him essentially complete control over the development programs of both the Navy and the AEC. He had the final say over which technology would be used.

Rickover’s top priority, aside from fitting the reactor in a submarine, was developing it quickly enough to beat the Soviets. To achieve this goal he wanted designs that were compact and unlikely to show early problems. One candidate design was a reactor cooled by liquid-metal sodium. The other was a pressurized water reactor, a type of light-water reactor (so named to distinguish it from a reactor that uses heavy water, which has hydrogen atoms with an extra neutron).

As Cowan tells the story, Rickover’s preference for light-water reactors was shaped by a letter from nuclear physicist Walter Zinn to the effect that “on the basis of existing knowledge, light water seemed to be the most promising.” Rickover contracted Westinghouse to build a light-water reactor, while General Electric (GE) developed a sodium-cooled reactor.

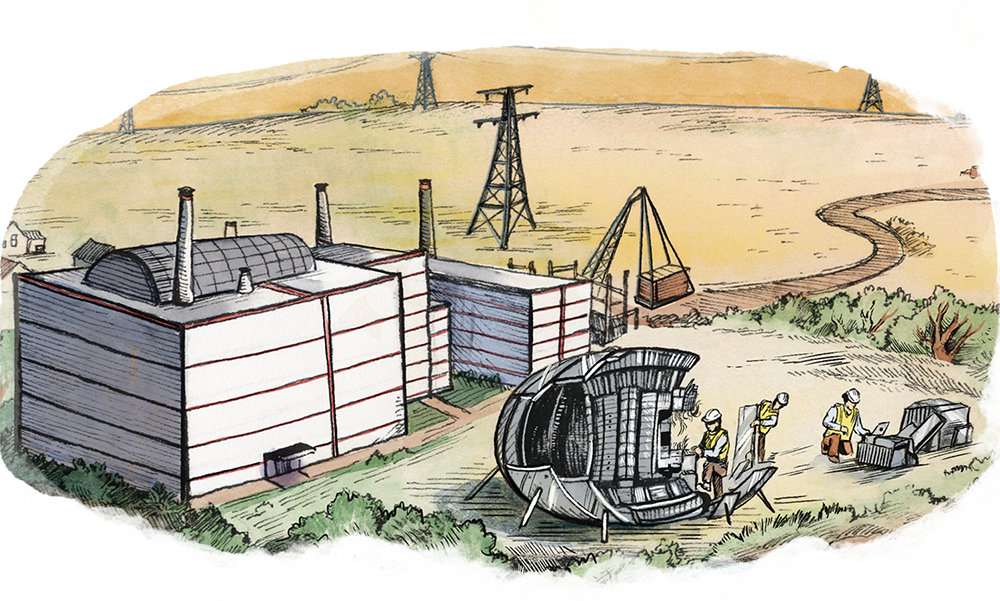

The now-famous USS Nautilus, the world’s first nuclear-powered submarine, was launched with Westinghouse’s light-water reactor in 1954. GE was slightly behind, with the USS Seawolf launching in 1955 with its sodium-cooled reactor.

In addition to being a year behind, GE’s reactor had serious disadvantages for submarines. Sodium reacts violently with water, making leaks particularly dangerous. As Pool recounts, “a month after launching, the Seawolf was tied up to the pier for reactor tests when a coolant leak caused two cracks in steam pipes…. Rickover didn’t dither.” He selected Westinghouse’s light-water design to power America’s submarine fleet.

Although Rickover’s passion was for submarines, he had also been pushing hard to extend nuclear propulsion to aircraft carriers, preferably with the same reactor that would work well for submarines. But in 1952, as Westinghouse was nearing startup of its first reactor, the AEC shifted its top priority to developing reactors for civilian use. The question then became whether to develop civilian reactors in a separate program or in a joint program with carrier reactors. A joint program could share expertise and resources, whereas separate programs would have to compete for them.

The new Eisenhower administration, intent on cutting federal spending, decided the matter by slashing the carrier idea. The AEC, which had been exploring an alternate reactor design for civilian use, also lost funds and was left with the light-water reactor as the only contender. Besides, demonstrating peaceful civilian use of nuclear power, and doing it faster than the Soviets, was critical, and the only proven reactor design would need to do the job. The reactors just had to be scaled up.

Under Rickover’s direction, the country’s first full-scale civilian nuclear power plant, running on a light-water reactor, began full operation at Shippingport, Pennsylvania, in 1957. But, as Pool reports, the AEC had much bigger plans, treating this as the first of many possible design types it would explore over the next decade, with different utilities trying out different designs.

> But thanks to Shippingport, it was never an equal contest…. Rickover’s influence and the connection with the naval reactor program gave the light-water option a momentum that the others were never able to overcome.

The decisions of Rickover and the AEC led to what is now known as the “bandwagon economy” of the 1960s. With demand rising, GE and Westinghouse both offered fixed-price “turnkey contracts” for utilities to build nuclear plants. The companies would initially sell plants at a loss, but would accrue experience and reduce costs so that future plants would be profitable. The contracts were also cheaper per unit of power output for larger plants than for smaller ones. Furthermore, the nuclear enthusiasm of the time made utilities that adopted nuclear power look more forward-thinking than those that stuck with old-fashioned coal.

The incentives thus aligned for utility companies to build many reactors, as large as possible and as quickly as possible. Light-water reactors (LWRs) soon proliferated and became locked in. Other countries followed suit, largely abandoning gas-cooled reactors and other designs.

The selection of light-water reactors was thus historically contingent, and made by a highly centralized technocratic regime. The choice was made, and then locked in due to rapid scaling up, prior to gaining a reasonable understanding of the alternatives.

The use of LWRs for civilian power generation was initially no more than an extension of their use for submarines. Rickover himself exerted disproportionate control over both projects, and options for commercializing alternate designs were foreclosed by the intimate relationship between reactor builders, the military, and the federal government. Neither market forces nor public debate informed the choice of light-water reactors over other options.

Given how consequential the choice of reactor design for civilian nuclear power was, the technocratic nature of this development process was unfortunate. While the LWR has proven spectacularly safe within America’s nuclear Navy, it has a more mixed history in civilian plants. Larger reactor cores contain more hot radioactive material but have relatively less surface area through which to dissipate its heat. So the smaller units used by the Navy are less prone to meltdowns than civilian reactors, which demand constant cooling.
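The geometric point behind this claim is the square-cube relationship. As a rough sketch, assuming an idealized spherical core of radius $r$ (real cores are roughly cylindrical, and decay-heat removal depends on much more than geometry), the surface available per unit of heat-producing volume falls as the core grows:

$$
\frac{S}{V} = \frac{4\pi r^{2}}{\tfrac{4}{3}\pi r^{3}} = \frac{3}{r},
\qquad
\frac{S(2r)/V(2r)}{S(r)/V(r)} = \frac{1}{2}.
$$

Doubling the radius multiplies the volume, and so the heat generated, by eight, but the surface through which that heat can passively escape by only four, halving the surface per unit of volume.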

Without ongoing active intervention, large LWRs are like runaway trains heading toward disaster, presenting unique safety challenges for civilian nuclear plants. Moreover, naturally occurring, unenriched uranium cannot sustain a chain reaction in light water (another name for ordinary water), because the water absorbs too many neutrons. Thus, the choice of light water as a moderator necessitates the use of enriched uranium as a fuel. This locks in a connection with enrichment facilities, and hence with the technologies used in producing nuclear weapons.

The centralization of the decision-making process meant that there was little input from engineers and stakeholders with clear incentives to anticipate such problems. And it marginalized many of the groups it would most directly affect, such as the Yakama and Navajo peoples, who have borne the brunt of the harms of uranium mining and nuclear weapons production.

The democratic deficits of nuclear energy’s origins live on in the contemporary nuclear debate. Today, the Nuclear Regulatory Commission (NRC) is only slightly less technocratic than its predecessor, the Atomic Energy Commission. Bureaucrats and political appointees make decisions about research, safety regulations, how to respond to incidents, regulation enforcement and compliance, and transportation, with only indirect influence from the president or Congress.

Rare opportunities for substantive public involvement and influence come at NRC licensing hearings for building or extending operation of facilities that create or store nuclear waste. Even in these hearings, the selection of who participates is limited by vague and contradictory standing and admissibility rules that essentially leave the decision to the appointed NRC panel.

Sometimes these panels are lenient, as in the Yucca Mountain hearing, when members of the public who lived nearby were admitted even if they did not exactly meet the requirements for judicial standing. Sometimes they are strict, as in the 2019 hearing deciding the fate of the West Texas temporary waste-storage facility. In this case, all but one member of the public was excluded on the basis of vague “contention admissibility” rules, even though many more who sought admission lived in the potentially affected area. These rules are intentionally strict, so as to keep hearings expert-driven, thereby limiting the public’s say.

This institutional inertia has also helped to ensure continued technological lock-in. Because private companies are unlikely to pour billions of dollars into researching new reactor designs that may never pan out, the federal government retains a central role in encouraging new developments.

But the U.S. government has yet to invest the necessary resources. In the 1950s, the AEC budgeted $200 million to develop five different experimental reactors over five years, about $2 billion in today’s dollars. For comparison, the Advanced Reactor Demonstration Program launched earlier this year by the Department of Energy was given a budget of only $230 million to support the development of two reactors. Worse, the program does not require prioritizing alternatives to light-water designs; it explicitly allows both. And we have yet to see how long Congress will maintain it.

Although the NRC responded to the Fukushima disaster with a reassessment of reactor design and a series of recommendations to prevent a similar event in the United States, there was no effort to foster deeper public debate over the direction of nuclear energy. One would hope that, if anything, the threat of an accident like Fukushima might be enough to alter institutional behavior. But unless one counts the ability to email the NRC for permission to listen in on one of its open meetings, the agency continues a technocratic process that is all but closed off to outsiders.

In 2016, the NRC introduced a strategy for “safely achieving effective and efficient non-light water reactor mission readiness,” promising a flexible licensing process. But according to a memo this January, only “six non-LWR developers have formally notified the staff of their intent to begin regulatory interactions.” This is a start, but without substantive public funding to explore a wide range of technological options — and without a fruitful public debate — it seems too much to hope that the NRC will pull nuclear energy out of the current morass. What, if anything, might be done to save it?

The technocratic development process of the light-water reactor produced public resistance that might have been avoided, and locked out alternate designs that might have been less prone to meltdown. As Joseph Morone and Edward Woodhouse argue, more disagreement, not less, might have saved nuclear power.

With a slower, more incremental, more circumspect development process, design flaws would have had time to emerge before embarrassing and highly visible failures. Instead of looking for good enough evidence to plunge ahead with one economical design and hoping to make it safe along the way, we might have sought a more inherently safe design and worked to make it more economical. A process that incentivized development pathways other than the light-water reactor might have better retained public trust.

The problem was not one of too much democracy, too much dissent, but too little.

Today’s scientistic nuclear energy debate risks repeating past mistakes, leading to one of two bad outcomes. On the one hand, knee-jerk nuclear opposition continues to block experimentation with alternatives to the LWR, and we might remain gridlocked indefinitely, leaving Americans stuck with outdated, expensive, risky nuclear reactors, and with unrealized potential to dramatically reduce carbon emissions and ameliorate climate change. On the other hand, the calls to plunge ahead at full speed with new reactor designs could yet win the day, with possibly disastrous results for democracy, human health, and the environment.

While the prospect of true “inherent safety” may remain a chimera for any complex technological system, what is known as passive safety may yet be a realizable goal. A traditional light-water reactor is by nature unstable: Without continuous cooling, it will overheat and may melt down, so preventing a meltdown requires constant management.**

But there are other reactor designs that have the opposite property. China is building “pebble bed” reactors, in which helium cools a silo of graphite-clad billiard balls of uranium fuel. Pebble-bed reactors do not melt down when coolant fails but rather gradually cool themselves. Imagine if passenger jets floated safely back to the ground after even the most catastrophic of failures. Hence the term “passive” safety.

Many designs for small modular reactors, including some advanced types of light-water reactor, also hold the promise of being passively safe. These include GE Hitachi’s BWRX-300 and the Westinghouse SMR, both LWRs that can cool themselves in the event of an accident. Russia, India, Pakistan, and China are already operating small-scale nuclear reactors, with several other countries considering construction of such designs. Other non-LWR designs, such as molten-salt reactors, go back many decades and have been pursued by several countries as safer alternatives, although none are yet in use. What we lack is not feasible technical alternatives to current LWRs but the overarching political structure to make them a reality.

Passive safety features can only take us so far toward a more inherently safe reactor. Radiation leaks are still possible from many of these designs, so their overall safety profile remains a function of the social and technical systems of which they are a part. But perhaps it would be demonstrably impossible for these new reactors, as it is for coal-fired power plants, to suffer the most devastating catastrophe: a meltdown.

We could yet live in a world in which the safety profile of nuclear technology is markedly different from how we understand it today — but only if we overcome the policies and political mindset that have kept the United States unable to follow through on commercializing alternatives to light-water reactors.

How, then, might we steer between disaster and morass?

Technology policy scholars David Collingridge and Edward Woodhouse have described a central epistemic challenge of any question about governing a complex technology. Given that no one is capable of ascertaining the relevant facts completely or objectively, reliable knowledge of a new technology cannot be the product of prior study, but only of experience. Societies, therefore, can only hope to proceed intelligently with developing new technologies through a collective process of trial and error.

What might this process look like? Consider a case study from Denmark. In response to the oil crisis of the 1970s, Denmark, like the United States and Germany, created centralized, government-directed programs to develop wind power. As with the light-water reactor in the United States, all three programs focused on scaling up as rapidly as possible. All eventually failed, locking in a small number of windmill designs that by the 1980s still did not run reliably.

But in Denmark, the story did not stop there. While the national R&D program stumbled, amateurs and artisans were growing a domestic market. By 1980, the carpenter Christian Riisager had used off-the-shelf parts to build and sell over fifty 22-kilowatt turbines, each able to meet the power needs of roughly five U.S. homes today.

Riisager’s success encouraged other craftspeople to enter the market. When the Danish government began offering wind-energy investment credits, demand increased. Artisans couldn’t produce fast enough, and small-scale agricultural manufacturers bought their patents, began producing, and slowly refined and scaled up the designs.

In order to be eligible for credits, wind turbines had to be licensed, which in turn required testing at a government-funded facility. The testing facility gave small manufacturers access to engineering expertise, adding sophistication on top of the reliable designs already developed through a trial-and-error learning process.

To further enhance learning, Danish wind companies founded an association of turbine owners and manufacturers with a monthly journal, through which they could all share advances with one another.

By the time of the California wind boom in the 1980s, Danish companies could offer turbines that, albeit less powerful, were far more reliable and economical than any others on the global market. Participating in this market fueled further trial-and-error learning, and by 1989 the Danes were producing reliable 500-kilowatt turbines, each of which could power well over a hundred American homes today.
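The “homes powered” comparisons rest on a simple conversion from a turbine’s rated power to its yearly energy output. The sketch below makes that arithmetic explicit. The capacity factor (average output as a fraction of rated power) and the annual household consumption are illustrative assumptions, not figures from the sources discussed here, and `homes_powered` is a hypothetical helper.

```python
# Rough check of the "homes powered" figures for the Danish turbines.
# CAPACITY_FACTOR and HOME_KWH_PER_YEAR are illustrative assumptions,
# not figures taken from the essay or its sources.
HOURS_PER_YEAR = 8_760       # hours in a non-leap year
CAPACITY_FACTOR = 0.25       # assumed average output as a fraction of rated power
HOME_KWH_PER_YEAR = 10_500   # assumed annual electricity use of a typical U.S. home


def homes_powered(rated_kw: float,
                  capacity_factor: float = CAPACITY_FACTOR,
                  home_kwh_per_year: float = HOME_KWH_PER_YEAR) -> float:
    """Estimate how many average homes a turbine's yearly output could supply."""
    annual_kwh = rated_kw * capacity_factor * HOURS_PER_YEAR
    return annual_kwh / home_kwh_per_year


if __name__ == "__main__":
    # Riisager's 22 kW turbines (1980) and the 500 kW Danish turbines (1989)
    for rated_kw in (22, 500):
        print(f"{rated_kw} kW turbine: about {homes_powered(rated_kw):.0f} homes")
```

Under these assumptions the sketch gives roughly five homes for a 22-kilowatt turbine and a little over a hundred for a 500-kilowatt one. The second figure is sensitive to the assumed capacity factor: a windier site or a better-tuned machine pushes it higher.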

By the early 2000s, Danish companies controlled 45 percent of the global wind turbine market. This success cannot be credited to the Danes’ own national research effort, which largely produced failures, but rather to diverse participation in a publicly supported turbine industry that fostered incremental design evolution. These artisan-scale Danish builders constructed the best wind turbines of the era, largely outclassing the NASA-led, multimillion-dollar efforts of the United States.

Could replicating certain aspects of the Danish experience improve the prospects for better, more trusted nuclear reactor designs?

The Danish story exemplifies an approach that political scientists call intelligent trial and error, or ITE. A framework for better managing the development of risky new technologies, intelligent trial and error is the most promising way to get out of our nuclear impasse — and perhaps our climate impasse too. It is what political scientism claims to demand — a scientific approach to policy — but it avoids the brittleness and centralization of technocratic control.

In conventional technology assessment, the goal of policymakers is to analyze the risks posed by a technology, and then come up with policy ideas for dealing with those risks. While this framework presents itself as value-neutral and scientific, it is a form of technocratic scientism: It puts decisions in the hands of expert analysts who consider only a narrow body of facts that have been produced within an unquestioned set of assumptions and values.

Intelligent trial and error, by contrast, does not claim to arrive at the neutral truth before acting. Instead, it aims to enhance learning and flexibility through the development process itself.

There are two key questions for any risky technological undertaking:

In place of plunging ahead with mass production of a single supposedly optimal design, ITE aims for incrementalism. In place of ideological uniformity, it incorporates diversity of value and perspective. In place of entrenching technological systems, it aims for ongoing nimbleness.

Embracing intelligent trial and error for nuclear power would mean creating a new R&D program to develop alternatives to the light-water reactor. This initiative would require appropriately slow pacing and limited scale. Slow, small-scale experimentation is necessary to ensure that problems are not missed and that learning does not come too late, after the technological infrastructure has become too costly to alter.

The complexity of light-water reactors, the speed with which the technology was locked in, the size and cost of new plants, and the often decades-long process of designing and permitting have made it exceptionally difficult to learn from errors and to flexibly innovate to correct them, or to switch to alternate designs. Among its many other features, an intelligent trial-and-error approach would likely find that several of these problems could be addressed at once by aiming to develop reactors that are small, cheap, and numerous, rather than large, expensive, and few.

Technocracy produces gridlock by invoking the name of science to short-circuit political conflict. By contrast, intelligent trial and error recognizes that action becomes possible only by engaging in the very stuff of politics: by locating where our values and interests overlap, and developing policy options to which most citizens can give at least partial assent.

Just as important as the trial and error of ITE is the intelligence. Not only does engaging in real political conflict make true democracy possible by including a broad set of perspectives, but it also allows for more intelligent outcomes by better anticipating mistakes. Alvin Weinberg, for instance, attributed the existence of protective containment vessels at American reactors (structures absent from Soviet designs such as the one at Chernobyl) to the United States allowing at least some public influence on nuclear decision-making.

Or consider the case of the Oxitec genetically modified mosquitoes. In 2011, when the biotechnology company wanted to test its mosquitoes designed to reduce the spread of dengue fever, it promoted deliberation within the communities of its test site in the state of Bahia, Brazil. The public’s initial reticence was replaced with enthusiasm, and the experiment was successful, shrinking the mosquito population in the test area by 85 percent over a year.

But when Oxitec attempted to do the same in Key West, Florida, the company pursued FDA approval without first engaging the community. So instead of enthusiasm it was met with a protest petition, signed by over a hundred thousand residents. Democratic deliberations likely would have helped to prevent the impasse.

The case of Juanita Ellis and the Comanche Peak nuclear plant offers crucial lessons about the benefits of broad participatory inclusion in deliberations.

Robert Pool tells the story. In the early 1970s, Westinghouse began construction on two light-water reactors, intended to be run by Texas Utilities Electric at a new power plant in Somervell County, about eighty miles southwest of downtown Dallas. At the time, Juanita Ellis was a church secretary and housewife who had never finished junior college. She would soon become a master of the ins and outs of nuclear regulation, holding TU Electric accountable for years until the plant eventually came online.

Ellis’s transformation into a regulatory force of nature began when she read an article in a local gardening magazine about the 1957 Price–Anderson Act, which provided federal accident insurance to nuclear reactor operators. She was disturbed to learn about the danger of nuclear power plants, and partnered with the author of the article and four friends to found the Citizens Association for Sound Energy, or CASE.

In the United States, nuclear power plant hearings are run much like a trial. The Atomic Safety and Licensing Board, composed of three members, hears testimony from the Nuclear Regulatory Commission, the utility company, and any members of the public admitted to participate, known as interveners. Only the NRC and the utility are guaranteed participation. While utilities have millions of dollars and a large staff of legal and technical experts, interveners are typically public-interest organizations relying on donations and volunteers. Detailed engineering testimony can take years, and the adversarial structure of the hearings pits interveners against the utilities, a contest in which interveners are at a huge disadvantage.

While CASE was one of three interveners at the outset of the Comanche Peak hearings in 1981, a year later it was the only one that hadn’t dropped out, making it the sole representative for the public in the licensing of the plant. Utility officials were optimistic at this turn of events, dismissing Ellis. They were wrong.

That same year, a former Comanche Peak engineer came to Ellis with important information. The engineer had found defective pipe supports for the plant, some of which are essential for maintaining the flow of cooling water to the core. Without cooling water, the plant would face meltdown. His bosses had dismissed his discovery, and he quit.

Based on information from him and other whistleblowers at the plant, CASE showed up to the hearings with a 445-page brief about the pipe supports. The licensing board suspended the hearings so the NRC could investigate. The NRC found no major problems and gave the plant a green light to address the errors and proceed. But the licensing board sided with Ellis, forcing TU Electric to endure a new inspection of its plant.

The true scope of the problems only gradually came to light. According to a 1987 article in a local magazine, inspectors found over five thousand defective pipe supports, two hundred weak seals, a broken condenser, and a culture of covering up reports of poor quality control systems.

Technical redesigns were not enough; TU Electric needed a change in leadership. The new manager, seeking to move beyond an adversarial relationship with the public, adopted a cooperative strategy instead. He approached Ellis, keeping her informed about the utility’s activities and seeking a deal: She would stop opposing Comanche Peak’s operating license, and he would compensate CASE for the cost of the hearings and publicly read a letter acknowledging the utility’s past mistakes. More importantly, TU Electric would integrate CASE into the safety process: involvement in inspections, access to the plant on 48 hours’ notice, and a seat on the plant’s safety board.

Ellis accepted — at the cost of becoming an outcast of the anti-nuclear movement that had seen her as its champion.

The story of Juanita Ellis demonstrates that a non-adversarial model for engaging the public is possible, and that public inclusion need not generate NIMBYism. Since coming online in 1990, Comanche Peak has retained a clean safety record, and plans to run for thirty more years.

Juanita Ellis’s intervention resulted in a more intelligent outcome, uncovering serious safety issues that the plant’s technocratic overseers lacked the proper incentives to discover and address. Her work ultimately strengthened the public buy-in and democratic legitimacy of the plant. Moreover, her relationship with the whistleblowing engineers was symbiotic. Whistleblowers need public partners to drive the political process, while public advocates need the assistance of experts.

But we should not want to make this story the norm. For it also demonstrates the limitations of the adversarial model through which the public is usually forced to participate.

Had Ellis not seen her reservations about the nuclear plant through, her fears could well have been realized. Though she may have averted a devastatingly costly and deadly accident, her advocacy delayed operation of the plant by six years, bringing its final price tag to $11 billion, up from the original $780 million. Had she and other interested members of the public been included from the outset, the delay and expense might well have been avoided.

Nuclear energy’s fall from grace in the United States was not brought on mainly by public ignorance or misguided environmentalists, but by advocates shielding the technology and themselves from legitimate criticism. As Joseph Morone and Edward Woodhouse argue, the exclusion of non-experts played no small part in the loss of legitimacy for nuclear power. Not only is increased inclusion good for democracy, but it could finally allow nuclear power to help break the climate stalemate.

What we need, then, is at last to democratize nuclear development. What we propose is the creation of participatory bodies in which citizens with diverse interests engage in the intelligent trial-and-error process.

How might such groups deliberate? There are several models already available. New England town meetings go back nearly four hundred years. Consensus conferences, citizens’ juries, and participatory technology assessments are all practices designed to enhance public deliberation. Many parliaments in Europe use what are known as Enquete Commissions (inquiry commissions), composed of experts and political appointees selected in proportion to the strength of the parliamentary parties, to resolve technological governance questions that are especially complex or politically intractable. In the United States, new bodies modeled on these examples could be formed to reboot the nuclear development process.

Nuclear energy also failed in part because it was privatized too soon. Westinghouse and General Electric acted entirely reasonably when they chose light-water designs they already knew from their defense work, and for which they had received billions in federal funding. Large, highly expensive designs will likely continue to be poor candidates for intelligent development by private industry alone. And the inherent instability of traditional light-water reactor designs makes them particularly resistant to trial-and-error learning; even after decades of experience, we are still caught off guard by near misses.

As alternative reactors enter the commercialization stage, we would want to avoid the lock-in of a suboptimal design, maintain adequate democratic oversight, and ensure a gradual scaling up of the technologies so as to enable an intelligent learning process with enough time to respond to mistakes. It will require a fine balance to keep the process diverse enough to achieve real participation but also coordinated enough to achieve steady innovation.

While there are many possible ways to ensure these characteristics, what we propose is a quasi-market structure: Firms would commercialize designs while the federal government would act as a literal investor, purchasing shares and the corresponding votes on the board. This structure would ensure some degree of meaningful democratic control via participatory mechanisms throughout the commercialization process.

Ensuring that the technological development process remains flexible and well-paced will require a broader cultural recognition that complex technological development is less a form of “listening to the science” than it is of learning.

This shift away from political scientism will mean involving scientists and engineers in a different way than we are used to. Experts would serve less as arbiters of truth than as what Roger Pielke, Jr. calls “honest brokers.” Honest brokers do not mainly use expertise to adjudicate conflicts, but to clarify and expand the range of alternatives being considered, to highlight what we do not yet know. Rather than closing down potential options by invoking what “science says,” they aim to facilitate a diversity of new options. The cooperative relationship between experts and citizens in turn enhances the learning process.

Too many advocates of nuclear power are wary of true democratic participation. Wouldn’t including ordinary people simply draw in the most cantankerous and fanatical among them? But this objection does not point to a problem with democracy per se but with how poorly we implement it. NIMBYism is not the cause of nuclear power’s demise, but a symptom of deliberative processes that are not transparent or inclusive enough to foster trust. Collaborative arrangements foster not fanatical resistance, as technocrats fear, but negotiation and compromise.

When the barriers to democratic participation are high, and the cost in time and money steep, it is no wonder that those with the strongest convictions dominate. But forums that encourage participation by a broader group of interests actually temper the influence of extremists.

These deliberative processes would also be more likely to advance the actual quality of debate. In contrast to the view of democracy as the tallying of citizens’ unreflective preferences, participatory bodies and intelligent trial and error emphasize the tentativeness of each member’s perspective and of any disagreement among them, and the capacity of knowledge to grow and values to evolve. Citizens with diverse viewpoints working closely with honest brokers harness the strength of robust disagreement: creative thinking. By fostering dissent, participatory deliberation also fosters new solutions to problems while leaving the development process flexible and ongoing. It avoids lock-in and the gentle tyranny of past decision-makers over present ones.

A fair and prudent deliberation process must also leave open the possibility of deciding eventually to limit, reduce, or eliminate the use of nuclear power, whether to meet a particular region’s power needs or the entire country’s. In some cases, it may be cheaper to eliminate coal plants by creating programs to increase energy efficiency and decrease consumption, rather than replacing them with other power sources. Moreover, citizens might simply prefer the benefits of energy-reducing programs, like better public transportation and easier mobility in denser cities, even if they end up being more expensive than replacing coal with nuclear. Leaving open this possibility would allow for the fullest level of inclusivity, and would recognize that external conditions and participants’ own values may change in the deliberation process. And it would acknowledge that an inclusive deliberation process itself is not guaranteed to produce a reactor design safe enough to satisfy most participants.

Both ascetic visions of relinquishment and grand technocratic visions of high-powered nuclear futures may obscure more prosaic but practical and democratic pathways. There is a difference between speed and haste. Rushing ahead with nuclear power to valiantly battle climate change would be no victory at all if that giant leap forward is followed by two leaps back. But it would also be senseless for nuclear power to remain forever frozen in amber by the decades-ago failures of the American debate.

Man plans and God laughs. With the aid of cutting-edge computer models, statistical analyses, and stacks of scientific studies, the belief that the Truth is within our grasp and can provide us with the unequivocally right decision becomes an alluring one. Once accepted, it is easy to see democracy and disagreement as mere impediments, obstacles to realizing the world as it was always meant to be. All that stands in the way of experts ushering in a brilliant future for ordinary people is — well, ordinary people.

Or so we hear again and again from the prophets of political scientism. But decades of experience show that this model only reduces the legitimacy of decisions by excluding ordinary people’s valid worries and objections, their legitimate definitions of progress, safety, and the good. Rather than countering populism, as so many experts say they want to, this model only fuels the view of government as elitist, unresponsive, and self-serving.

Political scientism has its basis in the idea that our problems are soluble through the rote application of technical knowledge because technology’s traits are uncomplicated, definite, and can be known with total certainty. Prior to the crashes of Lion Air Flight 610 and Ethiopian Airlines Flight 302, it would have been uncontroversial to point to statistics and tout the “inherent safety” of air travel. No one knows exactly what Boeing 737 MAX engineers and managers were thinking when they decided to rely on a single angle-of-attack sensor, known to be error-prone, without a backup and without even informing pilots, to compensate for an unstable design. Complacency in light of a history of success may have been part of it.

But decision-making at Boeing was also so compartmentalized that no one was capable of fully grasping the consequences of the design. And the FAA, perhaps also overly confident following years of good performance, reduced fruitful disagreement by delegating much of the responsibility to test and certify the safety of the 737 MAX’s software to Boeing itself. Even decades of high safety performance did not save Boeing and the FAA from repeating the kinds of managerial failures that lead to disaster in risky industries.

Cases like this show how precarious many of our technological achievements are, and how much they depend on getting the sociopolitical facets right, and keeping them that way. The achievement of safety for a complex technology is hard won but easily lost.

Perhaps it is utopian to think that an intelligent trial and error framework for developing nuclear energy would resolve these problems, or that it could even be implemented at all in today’s political climate. But the wreckage of the alternative is already plain. Fanaticized by stories about “the facts,” governance of new technology is increasingly unproductive, divisive, and dumb. All but the most extreme positions are excluded, and those who remain rail against their opponents as ignorant, or gripped by cognitive bias.

The irony is that the cultural elevation of science over democracy actually limits our ability to solve political problems in a scientific way. And it degrades the open, pluralistic debate on which democracy prides itself. Only by directly engaging in the uncertainties, complexities, and underlying value disagreements that define our collective problems can we begin to repair the damage, to put science back in service of democracy, and democracy in service of science.