Any time you walk outside, satellites may be watching you from space. There are currently more than 8,000 active satellites in orbit, including over a thousand designed to observe the Earth.

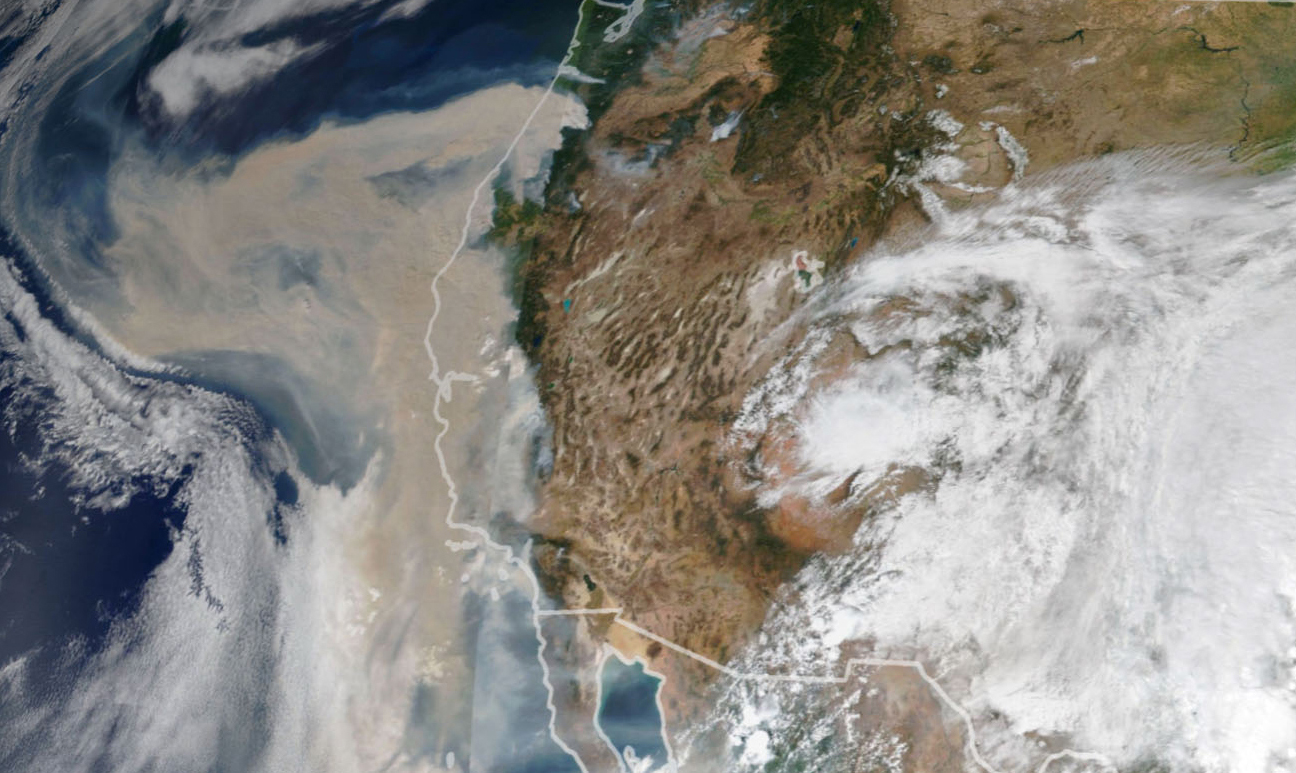

Satellite technology has come a long way since its secretive inception during the Cold War, when a country’s ability to successfully operate satellites meant not only that it was capable of launching rockets into Earth orbit but that it had eyes in the sky. Today not only governments across the world but private enterprises too launch satellites, collect and analyze satellite imagery, and sell it to a range of customers, from government agencies to the person on the street. SpaceX’s Starlink satellites bring the Internet to places where conventional coverage is spotty or compromised. Satellite data allows the United States to track rogue ships and North Korean missile launches, while scientists track wildfires, floods, and changes in forest cover.

The industry’s biggest technical challenge, aside from acquiring the satellite imagery itself, has always been to analyze and interpret it. This is why new AI tools are set to drastically change how satellite imagery is used — and who uses it. For instance, Meta’s Segment Anything Model, a machine-learning tool designed to “cut out” discrete objects from images, is proving highly effective at identifying objects in satellite images.

But the biggest breakthrough will likely come from large language models — tools like OpenAI’s ChatGPT — that may soon allow ordinary people to query the Earth’s surface the way data scientists query databases. Achieving this goal is the ambition of companies like Planet Labs, which has launched hundreds of satellites into space and is working with Microsoft to build what it calls a “queryable Earth.” At this point, it is still easy to dismiss their early attempt as a mere toy. But as the computer scientist Paul Graham once noted, if people like a new invention that others dismiss as a toy, this is probably a good sign of its future success.

This means that satellite intelligence capabilities that were once restricted to classified government agencies, and even now belong only to those with bountiful money or expertise, are about to be open to anyone with an Internet connection.

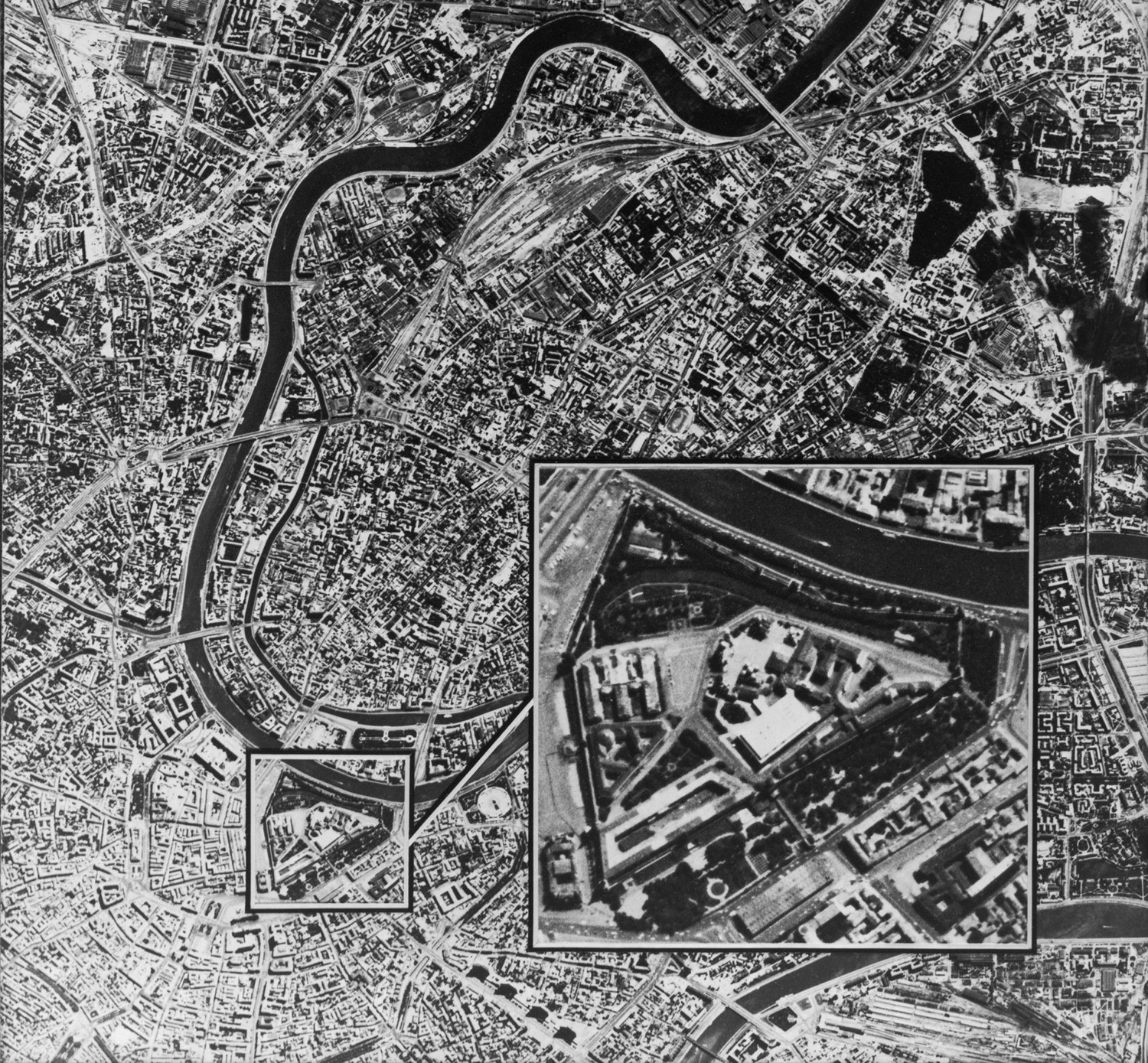

Less than a year after the Soviet Union launched the first manmade satellite, Sputnik 1, in October 1957, President Dwight D. Eisenhower authorized Corona, a CIA program to develop satellite reconnaissance capabilities that could compete with those of the USSR. In August 1960, he received the first satellite image of Soviet airfields. At the time, the Air Force, Navy, and CIA were running rival satellite programs. But after the initial success of Corona, there was a push to streamline management and tighten security, and the National Reconnaissance Office was founded in secret in 1961. Its existence was declassified in 1992, and it continues to be America’s primary agency running satellite reconnaissance operations.

Since the beginning of U.S. satellite surveillance, its primary use has been to gather intelligence on enemy capabilities and verify that America is not being lied to, especially with regard to nuclear weapons. During the Cold War, when the very existence of U.S. satellite reconnaissance was secret, treaties even included euphemistic provisions for satellite surveillance. For example, in the Anti-Ballistic Missile Treaty of 1972, the United States and the Soviet Union agreed that each party would use “national technical means of verification” — meaning first and foremost satellites — to monitor the other side.

By that time, satellite technology had already significantly improved. Early imaging satellites had collected data on rolls of film that had to be dropped back to Earth and developed. But beginning in 1972, NASA, together with the United States Geological Survey, launched what came to be known as Landsat satellites, which used Multispectral Scanners to detect and measure a broad spectrum of light. They transmitted this data back to Earth almost in real time, where it was processed and recorded as images.

Although the first such satellites were developed for geological observation, the National Reconnaissance Office did not take long to adopt the technology. By 1977, satellites were collecting so much reconnaissance data that a retired intelligence officer observed: “a satellite circling the world … will pick up more information in a day than the espionage service could pick up in a year.” All this reconnaissance data remained classified throughout the Cold War, however. And while Landsat geological images were available to civilians, they were expensive and hard to come by. In the 1990s, that changed.

In 1992, Congress passed the Land Remote Sensing Policy Act, allowing commercial companies to operate satellites and sell the data they compiled. The commercial space industry took off. Aided by huge advances in computing power, the rise of the Internet, and the increasing demand for mobile telephone services, commercial satellites became big business.

Not all aspects of the satellite industry became commercialized at the same time. The U.S. Department of Commerce began licensing commercial Earth observation companies right off the bat. But, citing national security concerns, the Department of Defense and the intelligence community long objected to the commercial licensing of satellites that used Synthetic-Aperture Radar (SAR). This type of radar can produce clear images even at night or through smoke, making it invaluable for military reconnaissance. The first commercial U.S. license to operate SAR satellites was granted in 2015 to XpressSAR, a Virginia company that plans to launch its satellites in 2024. Because the U.S. government resisted commercialization for so long, other countries have met growing international demand. For example, the Finnish satellite company ICEYE signed a contract in August 2022 to supply Ukraine with SAR imaging capabilities for its war with Russia.

Despite growing commercialization, federal contracts still make up the bulk of the satellite imagery market. But commercial satellite operators have been diversifying their customer base. Current customers include investment firms seeking to gauge the industrial activity of the companies they are tracking (by counting cars in parking lots, for instance, or ships entering ports), agricultural companies monitoring their crops, and mining companies assessing changes in elevation in open-pit mines. And the industry is growing as the cost of launching satellites into space rapidly decreases.

But this is only half the story. For data to be worth anything, it has to be interpreted. And recent innovations in machine learning technology make it easier to analyze satellite data than ever before.

Satellite image interpretation used to be painstaking manual work. During the Cold War, analysts identified objects of interest by poring over images that were not especially high in resolution. The first U.S. satellite images taken over the Soviet Union in 1960 showed clear objects down to about 40 feet in size; within a decade, the number was 6 feet. Compare this to the images the cameras on U-2 spy planes offered, most famously during the Cuban Missile Crisis in 1962, showing objects as small as 2.5 feet. Imagine having to distinguish, in even the best satellite images at the time, between a weapon of mass destruction, a defensive missile, and a harmless speck.
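To get a feel for what those numbers meant for an analyst, here is a rough back-of-the-envelope sketch. It treats each quoted resolution as the size of one distinguishable "cell" and counts how many cells would span an object. The 60-foot object length is a hypothetical value chosen only for illustration, not a figure from any historical image.

```python
def cells_across(object_size_ft: float, resolution_ft: float) -> float:
    """Number of resolution cells spanning an object along one dimension."""
    if resolution_ft <= 0:
        raise ValueError("resolution must be positive")
    return object_size_ft / resolution_ft


# Resolutions quoted in the text, in feet.
RESOLUTIONS_FT = {
    "first satellite images, 1960": 40.0,
    "satellite images a decade later": 6.0,
    "U-2 cameras, 1962": 2.5,
}

OBJECT_FT = 60.0  # hypothetical object length, for illustration only

for label, resolution in RESOLUTIONS_FT.items():
    cells = cells_across(OBJECT_FT, resolution)
    print(f"{label}: about {cells:.1f} cells across")
```

Under these assumptions, the object would span fewer than two cells in the earliest satellite images, which is hardly enough to tell one kind of structure from another.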

Much of this interpretive work can now be automated with machine learning, allowing for analysis of much higher volumes of information in a fraction of the time. This shift opens up whole new fields of analysis.

For example, researchers at Ohio State University combined daily high-resolution satellite images of tropical forests with a global map of land cover to create a large data set of images precisely located in space and time. They then trained a deep-learning model on the data, so that it can track changes in forest cover over time. This is impressive, but it also illustrates a limitation in the current state of the art: For now, researchers have to train their models on large amounts of data specific to the task they are trying to complete. Each new task requires a new model.

This problem is gradually getting solved. One step in that direction is Meta’s launch of the Segment Anything project earlier this year. Rather than having to train a specialized model to detect a particular type of object in an image, like trees in a forest or shipping containers in a port, generalized models like Segment Anything may soon allow researchers to identify any objects of interest using an off-the-shelf toolkit.

But the biggest new development in machine learning is the rise of large language models, or LLMs. ChatGPT, the most impressive and user-friendly AI chatbot employing this technology, produces genuinely responsive, if not entirely reliable, answers to written prompts, leading to an explosion of applications, including database queries. One of these, textSQL, has been integrated into the user interface CensusGPT, whose website offers a search bar inviting you to “ask anything about US crime, demographics, housing, income….” Programs like this, when they work, make it possible for users to skip all the cumbersome technical steps involved in database analysis and get straight to an answer.
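The general idea of turning a plain-English question into a database query can be sketched in a few lines. This is not how textSQL or CensusGPT is actually implemented. The function `translate_to_sql` is a hypothetical stand-in for the language model, hard-coded to handle a single question, and the table and its rows are made-up placeholders rather than real census data.

```python
import sqlite3


def translate_to_sql(question: str) -> str:
    """Hypothetical stand-in for the language-model step.

    A real tool would pass the question and the table schema to an LLM
    and receive SQL back. Here the translation is hard-coded.
    """
    canned = {
        "which city has the highest median income?":
            "SELECT name FROM cities ORDER BY median_income DESC LIMIT 1",
    }
    return canned[question.strip().lower()]


def answer(conn: sqlite3.Connection, question: str) -> list:
    """Translate the question to SQL, run it, and return the rows."""
    return conn.execute(translate_to_sql(question)).fetchall()


# Toy in-memory database with invented placeholder rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cities (name TEXT, median_income INTEGER)")
conn.executemany(
    "INSERT INTO cities VALUES (?, ?)",
    [("Exampleville", 52000), ("Sampleton", 61000), ("Testburg", 47000)],
)

print(answer(conn, "Which city has the highest median income?"))
```

The user never sees the SQL. The hard part, which this sketch skips entirely, is getting a model to produce a correct query for questions nobody anticipated.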

LLMs may also become a powerful tool for interpreting satellite data. In April, the satellite company Planet Labs gave a demo titled “Queryable Earth,” in which a researcher used a Microsoft AI model to query Planet Labs’ satellite imagery of California, along with its data on variations in the images over time. The researcher asked about the Camp Fire, the massive 2018 wildfire in California, typing “How much tree canopy cover was lost in the Camp Fire?” On the left side of the screen, the chatbot answered “The Camp Fire resulted in a loss of 114.4 square kilometers of tree canopy cover. Tree canopy cover refers to the layer of leaves, branches, and stems of trees that cover the ground when viewed from above.” On the right side of the screen, the page displayed a satellite image of the affected region after the fire.
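Behind an answer like that sits some fairly simple arithmetic, once the imagery has been classified. The sketch below is not Planet Labs' method, which the demo did not spell out. It assumes two hypothetical grids marking which pixels were tree canopy before and after a fire, plus a known pixel size, and counts the area that went from canopy to no canopy. The tiny grids and the 1,000-meter pixel size are invented for illustration.

```python
def canopy_loss_km2(before, after, pixel_size_m: float) -> float:
    """Area (km^2) of pixels that were canopy before and are not after.

    `before` and `after` are equal-shaped grids (lists of rows) whose
    truthy entries mark canopy pixels.
    """
    if len(before) != len(after) or any(
        len(row_b) != len(row_a) for row_b, row_a in zip(before, after)
    ):
        raise ValueError("before and after grids must have the same shape")

    lost_pixels = sum(
        1
        for row_b, row_a in zip(before, after)
        for was_canopy, is_canopy in zip(row_b, row_a)
        if was_canopy and not is_canopy
    )
    pixel_area_km2 = (pixel_size_m / 1000.0) ** 2
    return lost_pixels * pixel_area_km2


# Invented 3x3 example: 1 = canopy, 0 = no canopy.
before = [[1, 1, 0],
          [1, 1, 1],
          [0, 1, 1]]
after = [[1, 0, 0],
         [0, 0, 1],
         [0, 1, 1]]

print(canopy_loss_km2(before, after, pixel_size_m=1000.0))  # 3 lost pixels of 1 km^2 each
```

What a chatbot front end adds is the translation from the typed question to a computation like this one, run over the right region and dates.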

Not to be outdone, individual programmers have also developed open-source tools to query satellite data, building apps like satGPT that can search satellite imagery using natural language queries. SatGPT’s demo on Twitter this summer was less polished than Planet Labs’, but it demonstrated an equally impressive technical feat.

The fusion of large language models with satellite data is still in the proof-of-concept stage, as Planet Labs admits. But this step promises to make satellite data dramatically more accessible soon.

In the Cold War era, government analysts gazed intently at classified satellite images. Today, data scientists need special training and coding skills to explore huge data sets produced by private satellite companies. In the future, we all may be able to query planetary data as easily as we use Google Maps.

Still, there are technical and legal barriers to accessing the best images possible and making sense of them. Building data sets that are truly comprehensive and amenable to querying in chatbot fashion will take time. And it will take more advanced, more generalized imaging models to build sufficiently powerful querying tools.

There are also legal restrictions limiting private access to the highest-quality satellite data, though the restrictions are loosening. In 2014, the U.S. government dropped its limit on legal commercial image resolution from 50 centimeters to 25 — meaning that objects as small as ten inches became visible under the new regulation. This August, the limit was dropped to 16 centimeters, a little over six inches. How much more fine-grained the government’s own restricted images might be remains a secret, although, thanks to a careless tweet of a satellite image by President Trump in 2019, some have estimated that U.S. spy satellites may capture objects as small as four inches, or perhaps even smaller.
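The conversions behind those figures are easy to check. The short sketch below converts each resolution limit mentioned above from centimeters to inches, and the four-inch estimate back to centimeters. The only constant used is the standard 2.54 centimeters per inch.

```python
CM_PER_INCH = 2.54


def cm_to_inches(cm: float) -> float:
    """Convert centimeters to inches."""
    return cm / CM_PER_INCH


def inches_to_cm(inches: float) -> float:
    """Convert inches to centimeters."""
    return inches * CM_PER_INCH


# Commercial resolution limits mentioned in the text, in centimeters.
for limit_cm in (50, 25, 16):
    print(f"{limit_cm} cm is about {cm_to_inches(limit_cm):.1f} inches")

# Estimated capability of U.S. spy satellites mentioned in the text.
print(f"4 inches is about {inches_to_cm(4):.1f} cm")
```

So 25 centimeters is just under ten inches, and 16 centimeters is about 6.3 inches.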

In the thirty years since the U.S. government allowed the commercialization of satellite technology, private industry has developed by leaps and bounds. It boggles the mind that soon all of us may be able to see objects from space as small as a hand, and query the Earth from our pockets.

Lars Erik Schönander is a policy technologist at the Foundation for American Innovation.

