I encourage you to read Adam Greenfield’s analysis of Uber and its core values — it’s brilliant.

I find myself especially interested in the section in which Greenfield explores this foundational belief: “Interpersonal exchanges are more appropriately mediated by algorithms than by one’s own competence.” It’s a long section, so these excerpts will be pretty long too:

Like other contemporary services, Uber outsources judgments of this type to a trust mechanic: at the conclusion of every trip, passengers are asked to explicitly rate their driver. These ratings are averaged into a score that is made visible to users in the application interface: “John (4.9 stars) will pick you up in 2 minutes.” The implicit belief is that reputation can be quantified and distilled to a single salient metric, and that this metric can be acted upon objectively….

What riders are not told by Uber — though, in this age of ubiquitous peer-to-peer media, it is becoming evident to many that this has in fact been the case for some time — is that they too are rated by drivers, on a similar five-point scale. This rating, too, is not without consequence. Drivers have a certain degree of discretion in choosing to accept or deny ride requests, and to judge from publicly-accessible online conversations, many simply refuse to pick up riders with scores below a certain threshold, typically in the high 3’s.

This is strongly reminiscent of the process that I have elsewhere called “differential permissioning,” in which physical access to everyday spaces and functions becomes ever-more widely apportioned on the basis of such computational scores, by direct analogy with the access control paradigm prevalent in the information security community. Such determinations are opaque to those affected, while those denied access are offered few or no effective means of recourse. For prospective Uber patrons, differential permissioning means that they can be blackballed, and never know why….

And here’s the key point:

All such measures stumble in their bizarre insistence that trust can be distilled to a unitary value. This belies the common-sense understanding that reputation is a contingent and relational thing — that actions a given audience may regard as markers of reliability are unlikely to read that way to all potential audiences. More broadly, it also means that Uber constructs the development of trust between driver and passenger as a circumstance in which algorithmic determinations should supplant rather than rely upon (let alone strengthen) our existing competences for situational awareness, negotiation and the detection of verbal and nonverbal social cues.

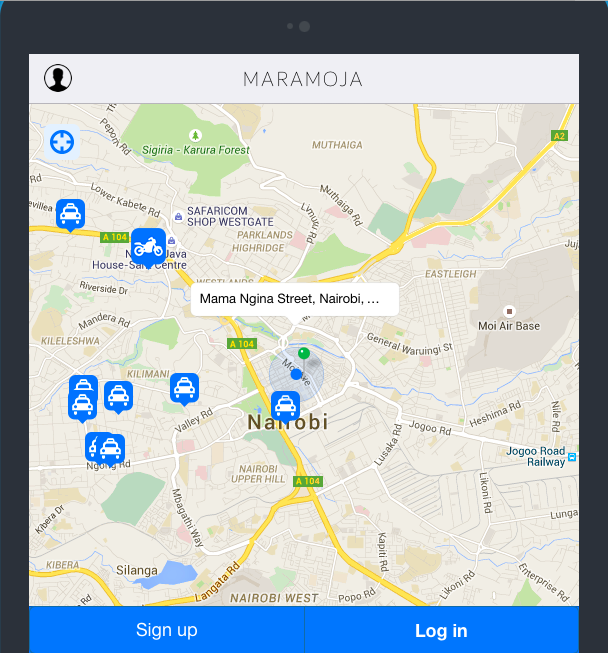

Contrast this model with that of MaraMoja Transport, a new company in Nairobi that matches drivers with riders on the basis of personal trust. Users of MaraMoja compare experiences with those of their friends and acquaintances: if someone you know well and like has had a good experience with a driver, then you can feel pretty confident that you’ll have a good experience too. But of course some of your friends will have higher risk tolerances than others; some will prefer speed to friendliness, others safety above all… It’s a kind of multi-dimensional sliding scale, in which you’re not just handed a single number but get the chance to consider and weigh multiple factors.

MaraMoja also rejects Uber’s infamous surge-pricing model in favor of a fixed price based on journey length. So, all in all, like Uber — but human and ethical.