Facts, like telescopes and wigs for gentlemen, were a seventeenth-century invention.

How hot is it outside today? And why did you think of a number as the answer, not something you felt?

A feeling is too subjective, too hard to communicate. But a number is easy to pass on. It seems to stand on its own, apart from any person’s experience. It’s a fact.

Of course, the heat of the day is not the only thing that has slipped from being thought of as an experience to being thought of as a number. When was the last time you reckoned the hour by the height of the sun in the sky? When was the last time you stuck your head out a window to judge the air’s damp? At some point in history, temperature, along with just about everything else, moved from a quality you observe to a quantity you measure. It’s the story of how facts came to be in the modern world.

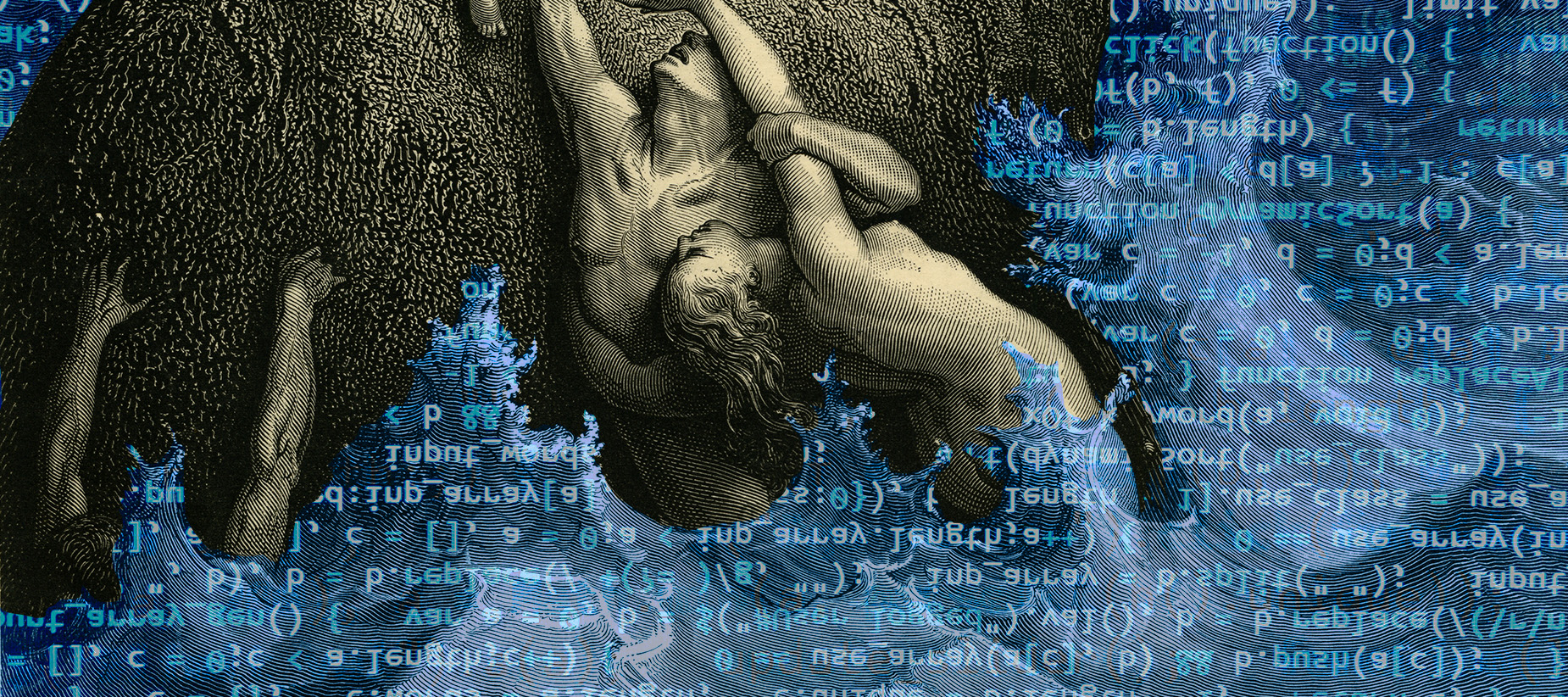

This may sound odd. Facts are such a familiar part of our mental landscape today that it is difficult to grasp that to the premodern mind they were as alien as a filing cabinet. But the fact is a recent invention. Consider temperature again. For most of human history, temperature was understood as a quality of hotness or coldness inhering in an object — the word itself refers to their mixture. It was not at all obvious that hotness and coldness were the same kind of thing, measurable along a single scale.

The rise of facts was the triumph of a certain kind of shared empirical evidence over personal experience, deduction from preconceived ideas, and authoritative diktat. Facts stand on their own, free from the vicissitudes of anyone’s feelings, reasoning, or power.

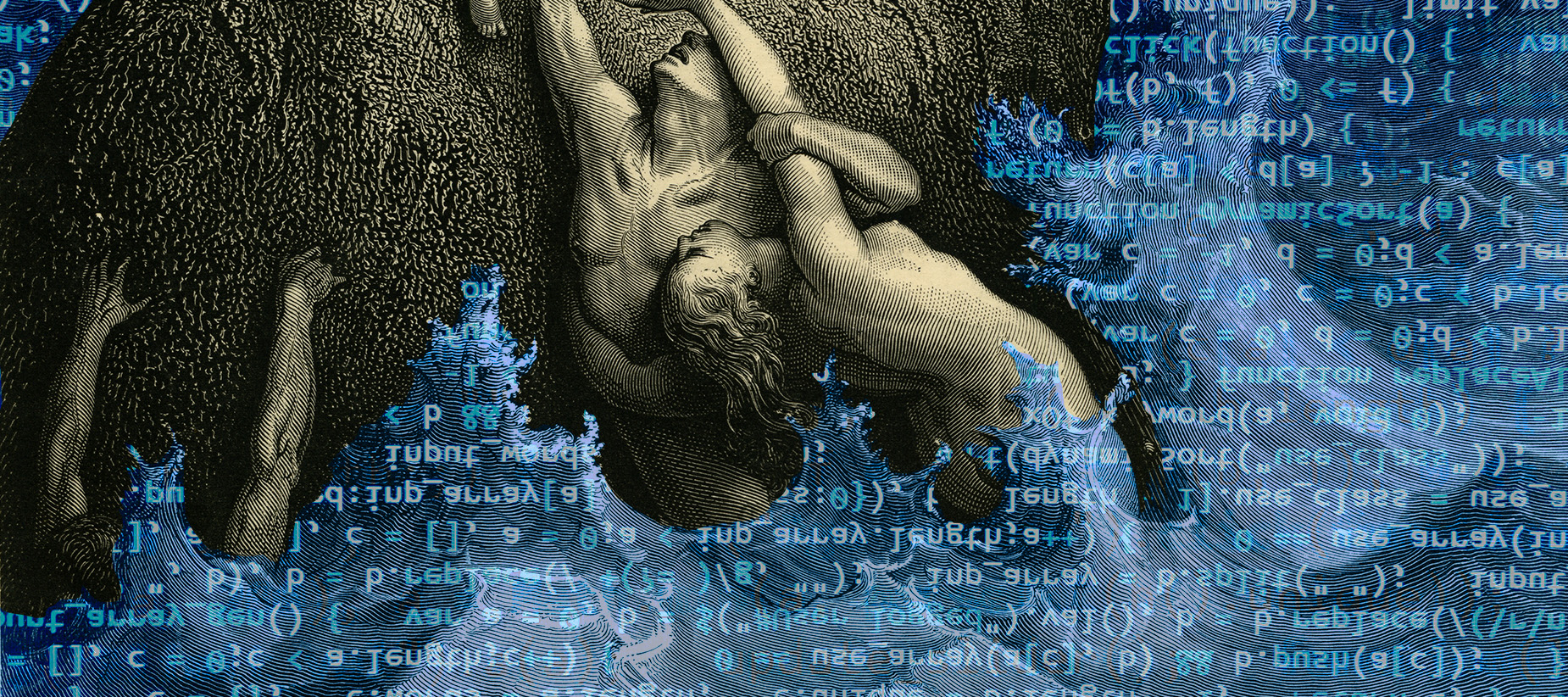

The digital era marks a strange turn in this story. Today, temperature is most likely not a fact you read off a mercury thermometer or an outdoor weather station. And it’s not a number you see in the newspaper that someone else read off a thermometer at the local airport. Instead, you pull it up on an app on your phone from the comfort of your sofa. It is a data point that a computer collects for you.

When theorists at the dawn of the computer age first imagined how you might use technology to automate the production, gathering, storage, and distribution of facts, they imagined a civilization reaching a new stage of human consciousness, a harmonious golden age of universally shared understanding and the rapid advancement of knowledge and enlightenment. Is that what our world looks like today?

You wake to the alarm clock and roll out of bed and head to the shower, checking your phone along the way. 6:30 a.m., 25 degrees outside, high of 42, cloudy with a 15 percent chance of rain. Three unread text messages. Fifteen new emails. Dow Futures trading lower on the news from Asia.

As you sit down at your desk with a cup of Nespresso, you distract yourself with a flick through Twitter. A journalist you follow has posted an article about the latest controversy over mRNA vaccines. You scroll through the replies. Two of the top replies, with thousands of likes, point to seemingly authoritative scientific studies making opposite claims. Another is a meme. Hundreds of other responses appear alongside, running the gamut from serious, even scholarly, to in-joke mockery. All flash by your eyes in rapid succession.

Centuries ago, our society buried profound differences of conscience, ideas, and faith, and in their place erected facts, which did not seem to rise or fall on pesky political and philosophical questions. But the power of facts is now waning, not because we don’t have enough of them but because we have so many. What is replacing the old hegemony of facts is not a better and more authoritative form of knowledge but a digital deluge that leaves us once again drifting apart.

As the old divisions come back into force, our institutions are haplessly trying to neutralize them. This project is hopeless — and so we must find another way. Learning to live together in truth even when the fact has lost its power is perhaps the most serious moral challenge of the twenty-first century.

Our understanding of what it means to know something about the world has comprehensively changed multiple times in history. It is very hard to get one’s mind fully around this.

In flux are not only the categories of knowable things, but also the kinds of things worth knowing and the limits of what is knowable. What one civilization finds intensely interesting — the horoscope of one’s birth, one’s weight in kilograms — another might find bizarre and nonsensical. How natural our way of knowing the world feels to us, and how difficult it is to grasp another language of knowledge, is something that Jorge Luis Borges tried to convey in an essay where he describes the Celestial Emporium of Benevolent Knowledge, a fictional Chinese encyclopedia that divides animals into “(a) those that belong to the Emperor, (b) embalmed ones, (c) those that are trained, … (f) fabulous ones,” and the real-life Bibliographic Institute of Brussels, which created an internationally standardized decimal classification system that divided the universe into 1,000 categories, including 261: The Church; 263: The Sabbath; 267: Associations. Y. M. C. A., etc.; and 298: Mormonism.

The fact emerged out of a shift between one such way of viewing the world and another. It was a shift toward intense interest in highly specific and mundane experiences, a shift that baffled those who did not speak the new language of knowledge. The early modern astronomer and fact-enthusiast Johannes Kepler compared his work to a hen hunting for grains in dung. It took a century for those grains to accumulate to a respectable haul; until then, they looked and smelled to skeptics like excrement.

The first thing to understand about the fact is that it is not found in nature. The portable, validated knowledge-object that one can simply invoke as a given truth was a creation of the seventeenth century: Before, we had neither the underlying concept nor a word for it. Describing this shift in the 2015 book The Invention of Science: A New History of the Scientific Revolution, the historian David Wootton quotes Wittgenstein’s Tractatus: “‘The world is the totality of facts, not of things.’ There is no translation for this in classical Latin or Elizabethan English.” Before the invention of the fact, one might refer, on the one hand, to things that exist — in Latin res ipsa loquitur, “the thing speaks for itself,” and in Greek to hoti, “that which is” — or, on the other hand, to experiences, observations, and phenomena. The fact, from the Latin verb facere, meaning to do or make, is something different: As we will come to understand, a fact is an action, a deed.

How did you know anything before the fact? There were a few ways.

You could of course know things from experience, yours or others’. But even the most learned gatherers of worldly knowledge — think Herodotus, Galen, or Marco Polo — could, at best, only carefully pile up tidbits of reported experience from many different cultural and natural contexts. You were severely constrained by your own personal experiences and had to rely on reports the truthfulness of which was often impossible to check. And so disagreements and puzzling errors contributed to substantial differences of expert opinion.

For most people, particularly if you couldn’t read, what you experienced was simply determined by your own cultural context and place. What could be known in any field of human activity, whether sailing in Portugal or growing silkworms in China, was simply what worked — the kind of knowledge discovered only through painful trial and error. Anything beyond that was accepted on faith or delegated to the realm of philosophical and religious speculation.

Given the limitations of earthly human experience, philosophers and theologians looked higher for truth. This earthly vale of tears was churning, inconstant, corruptible, slippery. And so, theoretical knowledge of unreachable heights was held to be both truer and nobler than experiential knowledge.

If you had a thorny question about God or the natural order that you could not answer by abstract reasoning, you had another path of knowledge available: the authority of scripture or the ancients, especially Aristotle — “the Philosopher,” as he was commonly cited. Questions such as those about the nature of angels or whether the equator had a climate suitable for human habitation were addressed by referring to various philosophical arguments. And so you had the curious case of urbane and learned men believing things that illiterate craftsmen would have known to be false, for instance that garlic juice would deactivate a magnet. Sailors, being both users of magnets and lovers of garlic, must surely have known that Plutarch’s opinion on this matter — part of a grander theory of antipathy and attraction — was hogwash. But rare was the sailor who could read and write, and anyway, who are you going to believe, the great philosopher of antiquity or some unlettered sea dog?

These ways of knowing all had something in common: to know something was to be able to fit it into the world’s underlying order. Because this order was more fundamental and, in some sense, more true than any particular experience, the various categories, measurements, and models that accounted for experience tended to expand or contract to accommodate the underlying order. Numbers in scholarly works might be rounded to a nearby number of numerological significance. Prior to the fourteenth century, the number of hours of daylight remained fixed throughout the year, the units themselves lengthening or shortening depending on the rising and setting of the sun.
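The arithmetic of these unequal "temporal" hours is simple enough to sketch. The Python below assumes the common medieval convention of dividing daylight into twelve hours, and it uses rough daylight figures for London (about 16.5 hours at midsummer, about 8 at midwinter) purely for illustration; these numbers are approximations, not historical records, and the function name is hypothetical.

```python
def temporal_hour_minutes(daylight_hours: float, hours_of_daylight: int = 12) -> float:
    """Length, in modern minutes, of one 'temporal' hour.

    Daylight is always divided into the same number of hours, so the
    length of each hour stretches or shrinks with the seasons.
    """
    return daylight_hours * 60 / hours_of_daylight

# Approximate daylight in London (illustrative assumption, not historical data)
midsummer = temporal_hour_minutes(16.5)
midwinter = temporal_hour_minutes(8.0)

print(f"Midsummer hour: {midsummer:.1f} minutes")  # Midsummer hour: 82.5 minutes
print(f"Midwinter hour: {midwinter:.1f} minutes")  # Midwinter hour: 40.0 minutes
```

Under these assumptions a midsummer hour runs to about 82 minutes and a midwinter hour to 40: the count of hours stays fixed while the unit itself moves.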

In Europe before the fact, knowing felt like fitting something — an observation, a concept, a precept — into a bigger design, and the better it fit and the more it resonated symbolically, the truer it felt.

An English yeoman farmer rubs his eyes to the sound of the cock crowing. The sun’s coming up, the hour of Prime. “Gloria Patri, et Filio, et Spiritui Sancto … ” It’s cold outside, and the frost dusts the grass, but the icy film over the water trough breaks easily. The early days of Advent belie the deep winter to come after Christmas.

He trundles to the mill with a sack of grain to make some flour for the week’s bread. His wife, who is from a nearby estate, says that something about their village’s millstone mucks up the flour. He thinks she’s just avoiding a bit of extra kneading. The news on everyone’s lips is of an unknown new ague afflicting another town in the county. Already seven souls, young and old, men and women, have been struck, and two, an older widow and the village blacksmith, have departed this world. It is said that a hot poultice of linseed meal and mustard, applied to the throat, has cured two or three. He resolves to get some mustard seeds from his cellar that very afternoon.

The Aristotelian philosophy is inept for New discoveries…. And that there is an America of secrets, and unknown Peru of Nature, whose discovery would richly advance [all Arts and Professions], is more than conjecture.

— Joseph Glanvill, The Vanity of Dogmatizing (1661)

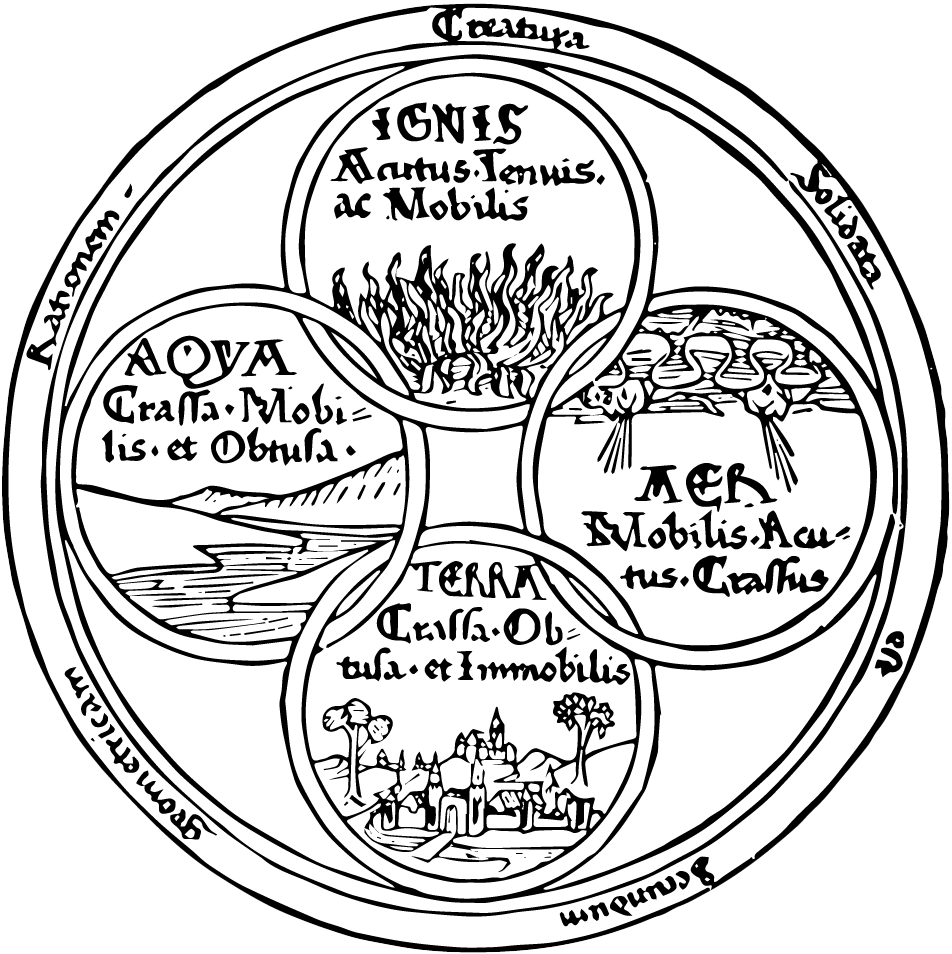

Between the 1200s and the 1500s, European society underwent a revolution. During this time, what the French historian Jacques Le Goff called an “atmosphere of calculation” was beginning to suffuse the market towns, monasteries, harbors, and universities. According to Alfred Crosby’s magnificent book The Measure of Reality, the sources and effects of this transformation were numerous. The adoption of clocks and the quantification of time gave the complex Christian liturgy of prayers and calendars of saints a mechanical order. The prominence and density of merchants and burghers in free cities boosted the status of arithmetic and record-keeping, for “every saleable item is at the same time a measured item,” as one medieval philosopher put it. Even the new polyphonic music required a visual notation and a precise, metrical view of time. That a new era had begun in which knowledge might be a quantity, something to be piled up, and not a quality to be discoursed upon, can be seen by the 1500s in developments like Tycho Brahe’s tables of astronomical data and Thomas Tallis’s forty-part composition Spem in alium, soon to be followed by tables, charts, and almanacs of all kinds.

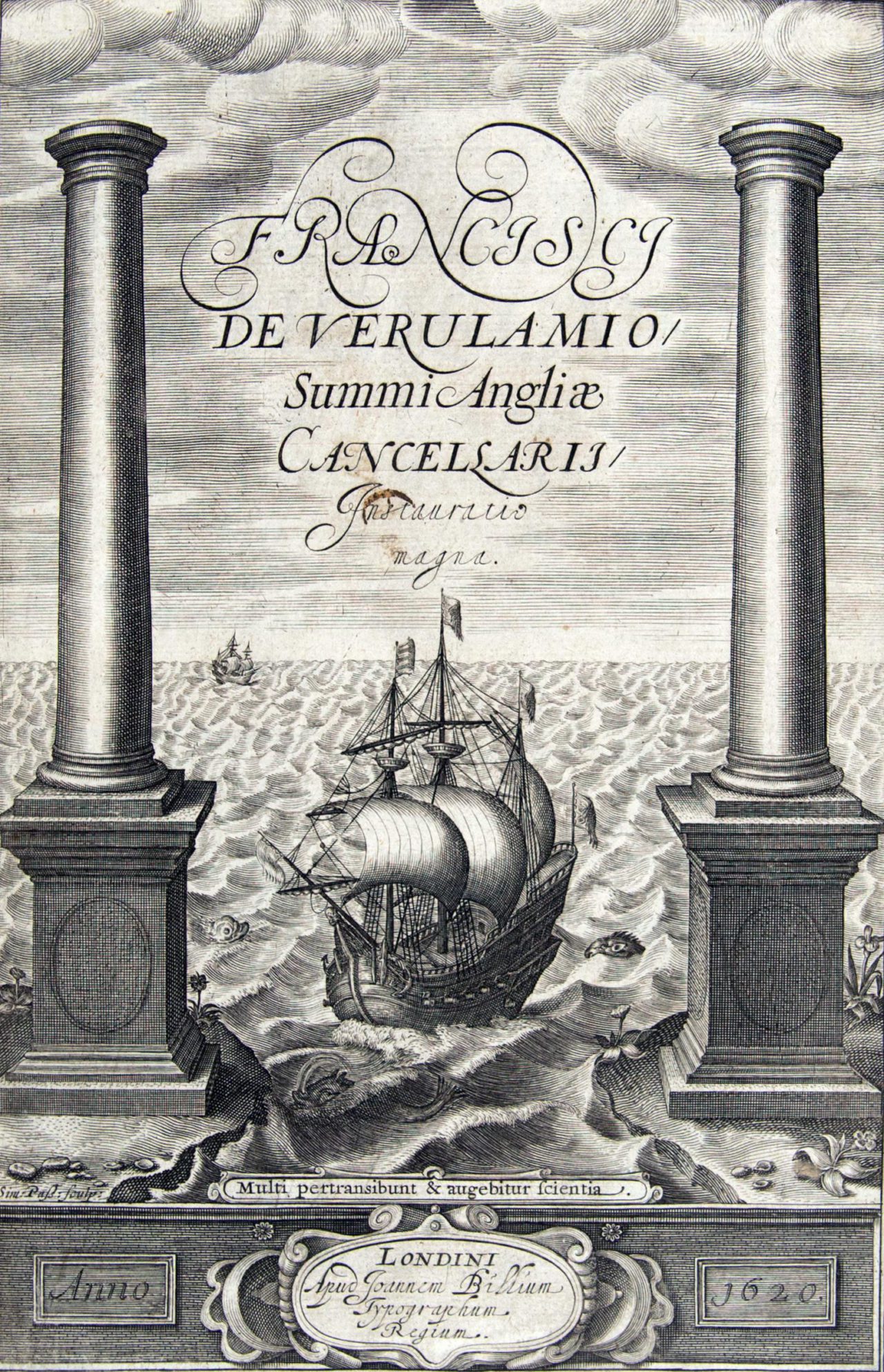

Also in the 1500s, Europeans came to understand that what their voyagers had been poking at was not an island chain or a strange bit of Asia but a new continent, a new hemisphere, really. The feeling that mankind had moved beyond the limits of ancient knowledge can be seen in the frontispiece for Bacon’s Novum Organum (1620), an etching of a galleon sailing between the Pillars of Hercules toward the New World. The New Star of 1572, which Brahe proved to be beyond the Moon and thus in the celestial sphere — previously thought to be immutable — woke up the astronomers. And the invention of movable type helped the followers of Martin Luther to replace the authority of antiquity, and of antiquity’s latter-day defenders, the Roman Papacy, with the printed text itself.

But if there were serious cracks in what Crosby calls the “venerable model” of European knowledge, what was it about the fact that seemed like the solution? The problem with medieval philosophy, according to Francis Bacon, was that philosophers, so literate in the book of God’s Word, failed to pay sufficient attention to the book of God’s works, the book of nature. And the few who bothered to read the book of nature were “like impatient horses champing at the bit,” charging off into grand theories before making careful experiments. What they should have done was to actually check systematically whether garlic deactivated magnets, or whether the bowels of a freshly killed she-goat, dung included, were a successful antivenom.

What then, exactly, is a fact? As we typically think of it today, we don’t construct, produce, generate, make, or decree facts. We establish them. But there’s a curious ambiguity deep in our history between the thing-ness of the fact and the social procedure for transforming an experience into a fact. Consider the early, pre-scientific usage of “fact” in the English common law tradition, where “the facts of the case” were decided by the jury. Whatever the accused person actually did was perhaps knowable only to God, but the facts were the deeds as established by the jury, held to be immutably true for legal purposes. The modern fact is what you get when you put experience on trial. And the fact-making deed is the social process that converts an experience into a thing that can be ported from one place to another, put on a bookshelf, neatly aggregated or re-arranged with others.

By the seventeenth century, the shift had become explicit. In place of the old model’s inconstancy, philosophers and scientists such as Francis Bacon and René Descartes emphasized methodical inquiry and the clear publication of results. Groups of likeminded experimenters founded institutions like the Royal Society in England and the Académie des Sciences in France, which established standards for new techniques, measuring tools, units, and the like. Facts, as far as the new science was concerned, were established not by observation or argument but by procedure.

One goal of procedure was to isolate the natural phenomenon under study from variations in local context and personal experience. Another goal was to make the phenomenon into something public. Strange as it is for us to recognize today, the fact was a social thing: what validated it was that it could be demonstrated to others, or replicated by them, according to an agreed-upon procedure. This shift is summed up in the motto of the Royal Society: Nullius in verba, “take no one’s word for it.”

The other important feature of facts is that they stand apart from theories about what they mean. The Royal Society demanded of experimental reports that “the matter of fact shall be barely stated … and entered so in the Register-book, by order of the Society.” Scientists could suggest “conjectures” as to the cause of the phenomena, but these would be entered into the register book separately. Whereas in the “venerable model,” scientists sought to fit experiences and observations into bigger theories, the new scientists kept fact and interpretive theories firmly apart. Theories and interpretations remained amorphous, hotly contested, tremulous; facts seemed like they stood alone, solid and sure.

The fact had another benefit. In a Europe thoroughly exhausted by religious and political arguments, the fact seemed to stand coolly apart from this too. Founded in the wake of the English Civil War, the Royal Society did not require full agreement on matters of religion or politics. A science conducted with regard to careful descriptions of matters of fact seemed a promising way forward.

The fact would never have conquered European science, much less the globe, if all it had going for it was a new attitude toward knowledge. Certainly it would never have become something everyday people were concerned with. David Wootton writes: “Before the Scientific Revolution facts were few and far between: they were handmade, bespoke rather than mass-produced, they were poorly distributed, they were often unreliable.” For decades after first appearing, facts yielded few definitive insights and disproved few of the reigning theories. The experimental method was often unwieldy and poorly executed, characterized by false starts and dead ends. The ultimate victory of the fact owes as much to the shifting economic and social landscape of early modern Europe as to its validity as a way of doing science.

Facts must be produced. And, as in any industry, growing the fact industry’s efficiency, investment, and demand will increase supply while reducing cost. In this sense, the Scientific Revolution was an industrial revolution as much as a conceptual one.

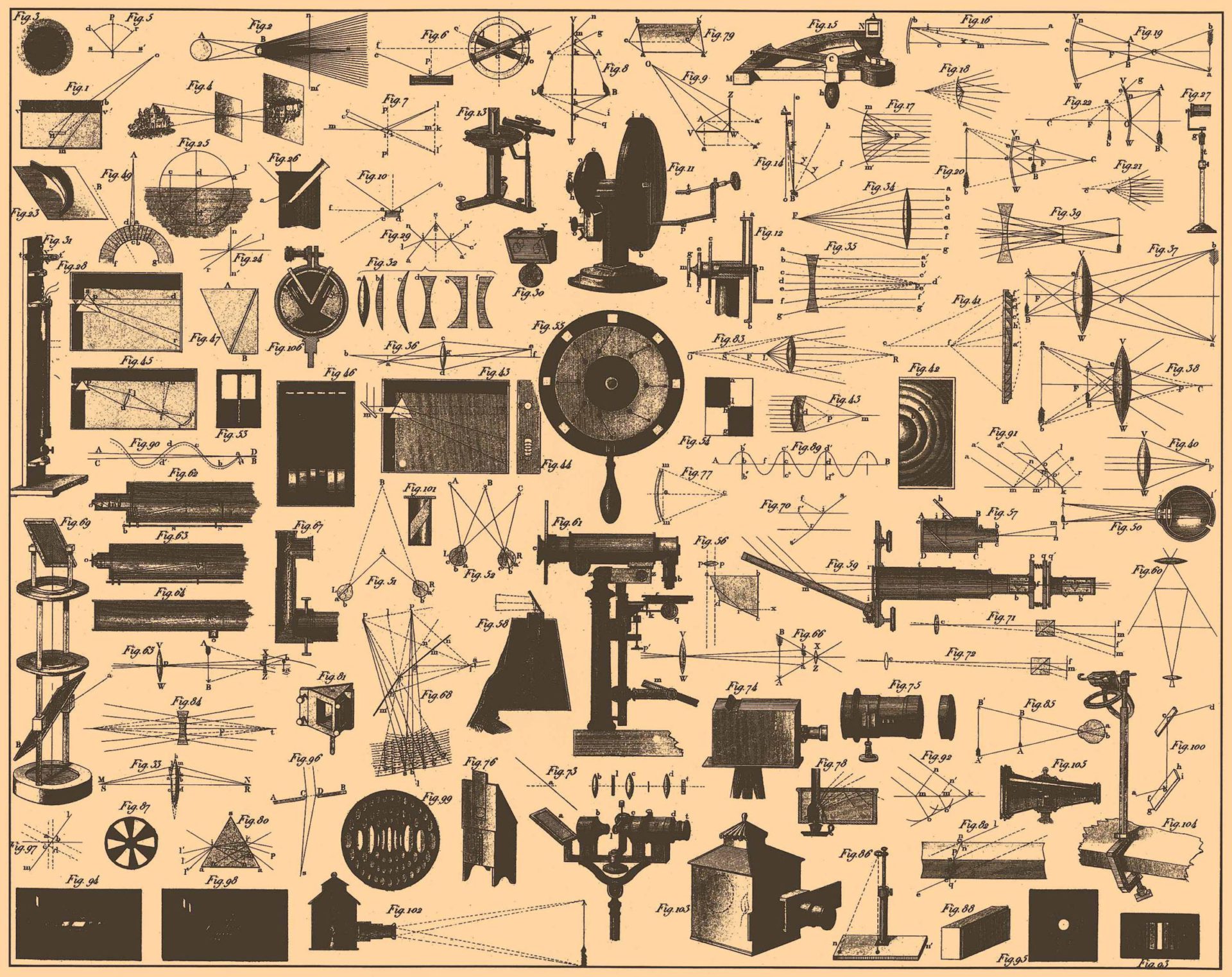

For example, the discovery of the Americas and the blossoming East Indies trade led to a maritime boom — which in turn created a powerful new demand for telescopes, compasses, astrolabes, and other navigational equipment — which in turn made it cheaper for astronomers, mathematicians, and physicists to do their work, and funded a new class of skilled artisans who built not only the telescopes but a broad set of commercial and scientific equipment — all of which meant a boom in several industries of fact-production.

But more than anything, it was the movable-type printing press that made the fact possible. What distinguishes alchemy from science is not the method but the shifting social surround: from guilds trading secret handwritten manuscripts to a civil society printing public books. The fact, like the book, is a form of what the French philosopher Bruno Latour calls an “immutable mobile” — a knowledge-thing that one can move, aggregate, and build upon without changing it in substance. The fact and the book are inextricably linked. The printed word is a “mechanism … to irreversibly capture accuracy.”

It was this immutability of printed books that made them so important to the establishment of facts. Handwritten manuscripts had been inconsistent and subject to constant revision. Any kind of technical information was notoriously unreliable: Copyists had a hard enough time maintaining accuracy for texts whose meaning they understood, much less for the impenetrable ideas of experimenters. But for science to function, the details mattered. Printing allowed scientists to share technical schema, tables of data, experiment designs, and inventions far more easily, durably, and accurately, and with illustrative drawings to boot.

The fact is a universalist ideal: it seems to achieve something independent of time and place. And yet the ideal itself arose from local factors, quirks of history, and happy turns of fate. It needed a specific social, economic, and technological setting to flourish and make sense. For example, to do lab work, scientists had to be able to see — but London gets as few as eight hours of daylight in winter. That roadblock was cleared by the Argand oil lamp. Relying on the principles of Antoine Lavoisier’s oxygen experiments, and developed in the late 1700s by one of his students, it was the first major lighting invention in two thousand years. It provided the light of ten candles, the perfect instrument for working, reading, and conducting scientific experiments after dark. Replace the glass chimney with copper, put it under a beaker stand, and the lamp’s clean, steady, controllable burner was also perfect for laboratory chemistry. With the arrival of the Argand lamp, clean, constant light replaced flickering candlelight at any hour of night or day, while precise heat for chemical experiments was merely a twist of the knob away.

What took place with books and lamps happened in many other areas of life: the replacement of variable, inconstant, and local knowledge with steady, replicable, and universal knowledge. The attractiveness of the modern model of facts was that anyone, following the proper method and with readily available tools, could replicate them. Rather than arguing about different descriptions of hotness, you could follow a procedure, step by step from a book, to precisely measure what was newly called “temperature,” and anyone else who did so would get the same result. The victory of this approach over time was not so much the product of a philosophical shift as a shift of social and economic reality: Superior production techniques for laboratory equipment and materials and the spread of scientific societies — including through better postal services and translations of scientific manuscripts — improved the quality of experimentation and knowledge of scientific techniques.

The belief that The Truth is easily accessible if we all agree to some basic procedures came to be understood as a universal truth of humankind — but really what happened is that a specific strand of European civilization became globalized.

If the seventeenth century was when facts were invented, the coffeehouse was where they first took hold. The central feature of the European coffeehouse, besides java imported from the colonies, was communal tables stacked with pamphlets and newspapers providing the latest news and editorial opinion. The combination of news, fresh political tracts, and caffeine led to long conversations in which new ideas could be hashed out and the contours of consensus reached — a process that was aided by the self-selection of interested parties into different coffeehouses. In the Europe of the old venerable model, learning looked like large manuscripts, carefully stewarded over the eons, sometimes literally chained to desks, muttered aloud by monks. In the Europe of the new science, learning looked like stacks of newspapers, journals, and pamphlets, read silently by gentlemen, or boisterously for effect.

The same conditions that revolutionized the production of scientific facts also transformed the creation of all kinds of other facts. The Enlightenment of the Argand lamp was also a caffeine-fueled awakening from the slumber of superstition and authority. Charles II felt threatened enough to ban coffeehouses in 1675, fearing “that in such Houses, and by occasion of the meetings of such persons therein, divers False, Malitious and Scandalous Reports are devised and spread abroad, to the Defamation of His Majesties Government, and to the Disturbance of the Peace and Quiet of the Realm.”

Coffeehouse culture and the “immutable mobile” of the printed word enabled a variety of ideas adjacent to the realm of the scientific fact. Is it a fact, as John Locke wrote, “that creatures belonging to the same species and rank … should also be equal one amongst another”? Or that the merchant sloop Margaret was ransacked off the coast of Anguilla? Or that the world, as The Most Reverend James Ussher calculated, was created on Sunday, October 23, 4004 B.C.?

In the Royal Society’s strict sense, no. But these kinds of claims still rested on a new mode of reasoning that prized clearly stated assumptions, attention to empirical detail, aspirations toward uniformity, and achieving the concurrence of the right sort of men. Repeatable verification processes had meshed with new means for distributing information and calculating consensus, leading simultaneously to revolutions in science, politics, and finance.

Modern political parties emerged out of this same intellectual mélange, and both Lloyd’s of London (the first modern insurance company) and the London Stock Exchange were founded at coffeehouses and at first operated there, providing new venues to rapidly discern prices, exchange information, and make deals. What the fact is to science, the vote is to politics, and the price to economics. All are cut from the same cloth: accessible public verification procedures and the fixity of the printed word.

Now, what I want is, Facts. Teach these boys and girls nothing but Facts. Facts alone are wanted in life. Plant nothing else, and root out everything else. You can only form the minds of reasoning animals upon Facts: nothing else will ever be of any service to them.

— Charles Dickens, Hard Times (1854)

Facts were becoming cheaper to make, and could almost be industrially produced using readily available measurement tools. And because producing facts required procedures that everyone agreed on, it was essential to standardize the measurement tools, along with their units.

When the French mathematician Marin Mersenne sought to replicate Galileo’s experiment on the speed of falling bodies, he faced a puzzle: Just how long was the braccio, or arm’s-length measurement, that Galileo used to report his results, and how could Mersenne get one in Paris? Even after finding a Florentine braccio in Rome, Mersenne still couldn’t be sure, since Galileo may have used a slightly different one made in Venice.

It took until the very end of the 1700s for an earnest attempt at universal standardization to begin, with the creation of the metric system. Later, in the 1800s, a body of international scientific committees was established to oversee the promulgation of universal standards. Starting from the most obvious measurements of distance, mass, and time, they eventually settled on seven fundamental units for measuring all the physical phenomena in the universe.

Standardization was by no means limited to science. It conquered almost every field of human activity, transforming what had been observations, intuitions, practices, and norms (the pinch of salt) into universal, immutable mobiles (the teaspoon). You began to be able to trust that screws made in one factory would fit nuts made in another, that a clock in the village would tell the same time as one in the city. As facts became more standardized and cheaper, you began to be able to expect people to know certain facts about themselves, their activities, and the world. It was not until the ascendancy of the fact — and related things, like paperwork — that it was common to know one’s exact birthday. Today, the International Organization for Standardization (better known by its multilingually standardized acronym, ISO) promulgates over 24,000 standards for everything from calibrating thermometers to the sizes and shapes of wine glasses.

But although the forces of standardization were making facts cheaper and easier to produce, they remained far from free. The specialized instruments necessary to produce these facts — and the trained and trusted measurers — were still scarce. Facts might be made at industrial scale, but they were still made by men, and usually “the right sort of men,” and thus directed at the most important purposes: industry, science, commerce, and government.

At the same time, the facts these institutions demanded were becoming increasingly complex, specialized, and derivative of other facts. A mining company might record not just how much coal a particular mine could produce, but also the coal’s chemical composition and its expected geological distribution. An accounting firm might calculate not just basic profits and losses, but asset depreciation and currency fluctuations. Our aspirations for an ever-more-complete account of the world kept up with our growing efficiency at producing facts.

The cost of such facts was coming down, but it remained high enough to have a number of important implications. In many cases, verification procedures were complex enough that while fellow professionals could audit a fact, the process was opaque to the layman. The expense and difficulty of generating facts, and the victories that the right facts could deliver — in business, science, or politics — thus led to new industries for generating and auditing facts.

This was the golden age of almanacs, encyclopedias, trade journals, and statistical compendia. European fiction of the 1800s is likewise replete with the character — the German scholar, the Russian anarchist, the English beancounter — who refuses to believe anything that does not come before him in the form of an established scientific fact or a mathematical proof. Novelists, in critiquing this tendency, could expect their readers to be familiar with such fact-mongers because they had proliferated in Europe by this time.

The wealth and status accruing to fact generation stimulated the development of professional norms and virtues not only for scientists but also for bankers, accountants, journalists, spies, doctors, lawyers, and dozens of other newly professionalizing trades. You needed professionals you could trust, who agreed on the same procedures, kept the same kinds of complex records, and could continue to generate reliable facts. And though raw data may have been publicly available in principle, the difficulty of accessing and making sense of it left its interpretation to the experts, with laymen relying on more accessible summations. Because the facts tended to pile up in the same places, and to be piled up by professionals who shared interests and outlook, reality appeared to cohere. In the age of the fact, knowing something felt like establishing it as true with your own two eyes, or referencing an authoritative expert whom you could trust to have a command of the facts.

Even in the twentieth century, with the growing power of radio and television for entertainment and sharing information, print continued to reign as the ultimate source of authority. As it had since the beginning, something about the letters sitting there in stark immutable relief bore a spiritual connection to the transcendent authority of the fact established by valid procedure. The venerable Encyclopedia Britannica was first printed in three volumes in 1771. It grew in both size and popularity, peaking in 1990 with the thirty-two-volume 15th edition, selling 117,000 sets in the United States alone, with revenues of $650 million. In 2010, it would go out of print.

A junior clerk at an insurance firm wakes to the ringing of his alarm clock. It’s a cold morning, according to the mercury, about 25 degrees. After a cup of Folgers coffee from the percolator, he commutes to work on his usual tram (#31–Downtown), picking up the morning edition of the paper along the way.

After punching in on the timeclock, he sits down at his appointed station. Amid the usual forms that the file clerk has dropped off for his daily work, he has on his desk a special request memo from his boss, asking him to produce figures on life expectancy for some actuarial table the firm requires.

He retrieves from inside his desk a copy of The World Almanac and Book of Facts (AY67.N5 W7 in the Library of Congress catalog system). After flipping through dozens of pages of advertisements, he finds the tables of mortality statistics and begins to make the required calculations. Strange how, for the common fellow, the dividing line between an answer easy to ascertain and one nearly impossible to arrive at was merely whether it was printed in The World Almanac.

Sometime in the last fifty years, the realm of facts grew so much that it became bigger than reality itself — the map became larger than the territory. We now have facts upon facts, facts about facts, facts that bring with them a detailed history of all past permutations of said fact and all possible future simulated values, rank-ordered by probability. Facts have progressed from medieval scarcity to modern abundance to contemporary superabundance.

Who could look at the 15th edition of the Encyclopedia Britannica, with 410,000 entries across thirty-two volumes, and not see abundance? At about $1,500 a set, that’s only a third of a penny per entry! What could be cheaper? For starters, Microsoft’s Encarta software, which, while not as good-looking on the shelf, cost only a fifth of a penny per entry in its final edition in 2009; the whole bundle was priced at just $30. But cheaper still is Wikipedia, which currently has 6,635,949 English-language entries at a cost per entry of absolutely nothing. Abundance is a third of a penny per entry. Superabundance is more than fifteen times as many entries for free.
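The per-entry arithmetic above is simple division. A minimal Python sketch, using only the price and entry counts quoted in that paragraph (Encarta’s entry count is not given, so it is left out), confirms that a Britannica entry cost a little over a third of a cent and that English Wikipedia has roughly sixteen times as many entries:

```python
# Figures quoted in the essay; prices are in US dollars.
BRITANNICA_SET_PRICE = 1_500
BRITANNICA_ENTRIES = 410_000
WIKIPEDIA_ENTRIES = 6_635_949  # English-language entries at the time of writing


def cents_per_entry(set_price_usd: float, entries: int) -> float:
    """Spread the price of a whole reference set evenly across its entries, in cents."""
    return set_price_usd * 100 / entries


britannica_cost = cents_per_entry(BRITANNICA_SET_PRICE, BRITANNICA_ENTRIES)
size_ratio = WIKIPEDIA_ENTRIES / BRITANNICA_ENTRIES

print(f"Britannica: {britannica_cost:.2f} cents per entry")        # 0.37
print(f"Wikipedia: {size_ratio:.1f} times as many entries, free")  # 16.2
```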

The concept of superabundance owes especially to the work of media theorist L. M. Sacasas. In an essay on the transformative power of digital technology published in these pages, he writes that “super-abundance … encourages the view that truth isn’t real: Whatever view you want to validate, you’ll find facts to support it. All information is also now potentially disinformation.” Sacasas continues to investigate the phenomenon in his newsletter, The Convivial Society.

What makes superabundance possible is the increasing automation of fact production. Modern fact-making processes (the scientific method, actuarial sciences, process engineering, and so forth) still depended on skilled people well into the twentieth century. Even where a computer like an IBM System/360 mainframe might be doing the tabulating, the work of both generating inputs and checking the result would often be done by hand.

But we have now largely uploaded fact production into cybernetic systems of sensors, algorithms, databases, and feeds. The agreed-upon social procedures for fact generation of almost every kind have been programmed into these sensor–computer networks, which then produce facts programmatically on everything from weather patterns to financial trends to sports statistics, freeing up skilled workers for other tasks.

For instance, most people today are unlikely to encounter an analog thermometer outside of a school science experiment — much less build such an instrument themselves. Mostly, we think of temperature as something a computer records and provides to us on a phone, the TV, or a car dashboard. The National Weather Service and other similar institutions produce highly accurate readings from networks of tens of thousands of weather stations, which are no longer buildings with human operators but miniaturized instruments automatically transmitting their readings over radio and the Internet.

The weather app Dark Sky took the automation of data interpretation even further. Based on the user’s real-time location, the app pulled radar images of nearby clouds and extrapolated where those clouds would be in the next hour or so to provide a personalized weather forecast. Unlike a human meteorologist, Dark Sky did not attempt to “understand” weather patterns: it didn’t look at underlying factors of air temperature and pressure or humidity. But with its access to location data and local radar imagery, and with a sleek user interface, it proved a popular, if not entirely reliable, tool for the database age.

Even in the experimental sciences, where facts remain relatively costly, they are increasingly generated automatically by digital instruments rather than by procedures carried out directly by scientists. For example, when scientists were deciphering the structure of DNA in the 1950s, they needed to carefully develop X-ray photos of crystallized DNA and then ponder what these blurry images might tell them about the shape and function of the molecule. But by the time of the Human Genome Project in the 1990s, scientists were digitally assembling short pieces of DNA into a complete sequence for each chromosome, relying on automated computer algorithms to puzzle out how it all fit together.
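Real genome assemblers had to cope with sequencing errors, repeated stretches of DNA, and millions of reads, so what follows is only a toy illustration of the underlying idea, not the Human Genome Project’s actual software. It is a minimal greedy overlap assembler in Python, a strategy assumed here for simplicity: it repeatedly finds the two fragments whose end and beginning overlap the most and merges them, until no overlaps remain. The fragment strings are made up for the example.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that matches a prefix of `b`, or 0 if shorter than min_len."""
    start = 0
    while True:
        # Look for the first min_len letters of b somewhere in a.
        start = a.find(b[:min_len], start)
        if start == -1:
            return 0
        # Accept the match only if the rest of a also lines up with b.
        if b.startswith(a[start:]):
            return len(a) - start
        start += 1


def greedy_assemble(reads: list[str], min_len: int = 3) -> list[str]:
    """Merge overlapping fragments greedily; return the resulting contigs."""
    reads = list(dict.fromkeys(reads))  # drop exact duplicates, keep order
    # A fragment wholly contained in another adds no new sequence.
    reads = [r for r in reads if not any(r != other and r in other for other in reads)]
    while len(reads) > 1:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(reads):
            for j, b in enumerate(reads):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            break  # nothing overlaps: leave the remaining contigs separate
        merged = reads[best_i] + reads[best_j][best_len:]
        reads = [r for k, r in enumerate(reads) if k not in (best_i, best_j)]
        reads.append(merged)
    return reads


fragments = ["AGCTTGC", "GATTACA", "GCTTGCA", "TACAGCT"]  # shuffled, overlapping pieces
print(greedy_assemble(fragments))  # ['GATTACAGCTTGCA']
```

Greedy merging can go wrong when the same short sequence occurs in several places, which is one reason larger assemblers rely on more elaborate graph-based methods.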

In 1986, Bruno Latour still recorded that almost every scientific instrument of whatever size and complexity resulted in some sign or graph being inscribed on paper. No longer.

Few things feel more immutable or fixed than a ball of cold, solid steel. But if you have a million of them, a strange thing happens: they will behave like a fluid, sloshing this way and that, sliding underfoot, unpredictable. In the same way and for the same reason, having a small number of facts feels like certainty and understanding; having a million feels like uncertainty and befuddlement. The facts don’t lie, but data sure does.

The shift from printed facts (abundance) to computerized data (superabundance) has completely upended the economics of knowledge. As the supply of facts approaches infinity, information becomes too cheap to value.

What facts are you willing to pay for today? When was the last time you bought an almanac or a reference work?

And what is it that those at the cutting edge of data — climate scientists, particle physicists, hedge fund managers, analysts — sell? It isn’t the facts themselves. It isn’t really the data itself, the full collection of superabundant facts, though they might sell access to the whole as a commodity. Where these professionals really generate value today is in selling narratives built from the data, interpretations and theories bolstered by evidence, especially those with predictive value about the future. The generation of facts in accordance with professional standards has increasingly been automated and outsourced; it is, in any case, no longer a matter of elite concern. Status and wealth now accrue to those “symbolic analysts” who can summon the most powerful or profitable narratives from the database.

L. M. Sacasas explains the transition from the dominance of the consensus narrative to that of the database in his essay “Narrative Collapse.” When facts were scarce, scientists, journalists, and other professionals fought over the single dominant interpretation. When both generating new facts and recalling them were relatively costly, this dominant narrative held a powerful sway. Fact-generating professionals piled up facts in support of a limited number of stories about the underlying reality. A unified sense of the world was a byproduct of the scarcity of resources for generating new knowledge and telling different stories.

But in a world of superabundant, readily recalled facts, generating the umpteenth fact rarely gets you much. More valuable is skill in rapidly re-aligning facts and assimilating new information into ever-changing stories. Professionals create value by generating, defending, and extending compelling pathways through the database of facts: media narratives, scientific theories, financial predictions, tax law interpretations, and so forth. The collapse of any particular narrative due to new information only marginally reshapes the database of all possible narratives.

Consider this change at the level of the high school research paper. In 1990, a student tasked with writing a research paper would sidle up to the school librarian’s desk or open the card catalog to figure out what sources were available on her chosen subject. An especially enterprising and dedicated student, dissatisfied with the offerings available in the school’s library, might decamp to her city’s library or maybe even a local university’s. For most purposes and for the vast majority of people, the universe of the knowable was whatever could be found on the shelves of the local library.

The student would have chosen her subject, and maybe she would already have definite opinions about the factors endangering the giant panda or the problem of child labor in Indonesian shoe factories. But the evidence she could marshal would be greatly constrained by what was available to her. Because the most mainstream and generally accepted sources were vastly more likely to be represented at her library, the evidence she had at hand would most likely reflect expert consensus and mainstream beliefs about the subject. It wasn’t a conspiracy that alternative sources, maverick thinkers, or outsider researchers were nowhere to be found, but it also wasn’t a coincidence. Just by shooting for a solid B+, the student would probably produce a paper with more-or-less reputable sources, reflective of the mainstream debate about the subject.

Today, that same student would take a vastly different approach. For starters, it wouldn’t begin in the library. On her computer or on her phone, the student would google (or search TikTok) and browse Wikipedia for a preliminary sketch of the subject. Intent on using only the best sources, she would stuff her paper with argument-supporting citations found via Google Scholar, Web of Science, LexisNexis, JSTOR, HathiTrust, Archive.org, and other databases that put all of human knowledge at her fingertips.

Thirty years ago, writing a good research paper meant assembling a cohesive argument from the available facts. Today, it means assembling the facts from a substantially larger pool to fit the desired narrative. It isn’t just indifferent students who do this, of course. Anyone who works with data knows the temptation, or sometimes the necessity, of squeezing every bit of good news out of an Excel spreadsheet, web traffic report, or regression analysis.

After scrolling through Twitter, you get back to your morning’s work: preparing a PowerPoint presentation on the growth of a new product for your boss. You play around with OpenAI’s DALL·E 2 to create the perfect clip art for the project and start crunching the numbers. You don’t need to find them yourself — you have an analytics dashboard that some data scientist put together. Your job is to create the most compelling visualization of steady growth. There has been growth, but it hasn’t really been all that steady or dramatic. But if you choose the right parameters from a menu of thousands — the right metric, the right timeframe, the right kind of graph — you can present the data in the best possible light. On to the next slide.
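To see how much latitude even one item on that menu gives, take just the choice of time window. The Python sketch below is a hypothetical illustration with made-up monthly figures: it searches every window of at least three months for the one with the largest end-over-start growth. The same series that grew 17 percent over the full year shows about 36 percent growth over its best-looking window.

```python
from itertools import combinations

# Hypothetical monthly active users (thousands) for the new product.
monthly_users = [100, 96, 104, 99, 97, 110, 108, 131, 118, 121, 112, 117]


def growth(series: list[float], start: int, end: int) -> float:
    """Fractional change from series[start] to series[end]."""
    return series[end] / series[start] - 1


def most_flattering_window(series: list[float], min_months: int = 3) -> tuple[int, int]:
    """Return the (start, end) indices, spanning at least min_months, with the largest growth."""
    candidates = [
        (s, e) for s, e in combinations(range(len(series)), 2) if e - s + 1 >= min_months
    ]
    return max(candidates, key=lambda w: growth(series, *w))


full_year = growth(monthly_users, 0, len(monthly_users) - 1)
start, end = most_flattering_window(monthly_users)
print(f"Full year: {full_year:.0%}")
print(f"Months {start + 1}-{end + 1}: {growth(monthly_users, start, end):.0%}")
```

Nothing in the data changes between the two numbers; only the frame does.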

You decide to go out for lunch and try someplace new. You pull up Yelp and scroll through the dozens of restaurant options within a short walking distance. You aimlessly check out a classic diner, a tapas restaurant, and a new Thai place. It’s just lunch, but it also feels like you shouldn’t miss out on the chance to make the most of it. You sort of feel like a burger, but the diner doesn’t make it on any “best of” burger lists (you quickly checked). Do you pick the good-but-not-special burger from the diner or the Michelin Guide–recommended Thai place that you don’t really feel like? Whatever you choose, you know there will be a pang of FOMO: Fear Of Missing Out, the price you pay for having a world of lunch-related data at your fingertips but only enough stomach for one.

Being limited to a smaller set of facts used to also require something else: trust in the institutions and experts that credentialed the facts. This trust rested on the belief that they were faithful stewards on behalf of the public, carrying out those social verification procedures so that you did not need to.

If you refused to trust the authority and judgment of the New Yorker, the New England Journal of Medicine, or Harvard University Press, then you weren’t going to be able to write your paper. And whatever ideas or thinkers did not meet their editorial judgments or fact-checking standards would simply never appear in your field of view. There’s a reason why anti-institutional conspiratorializing — rejecting the government, the media, The Man — was a kind of vague vibes-based paranoia. You could reject those authorities, but the tradeoff was that this cast your arguments adrift on the vast sea of feeling, intuition, personal experience, and hearsay.

And so, the automatic digital production of superabundant data also led to the apparent liberation of facts from the authorities that had previously generated and verified them. Produced automatically by computers, the data seem to stand apart from the messy social process that once gave them authority. Institutions, expertise, the scientific process, trust, authority, verification — all sink into the invisible background, and the facts seem readily available for application in diverging realities. The computer will keep giving you the data, and the data will keep seeming true and useful, even if you have no understanding of or faith in the underlying theory. As rain falls on both the just and the unjust, so does an iPhone’s GPS navigate equally well for NASA physicists and for Flat Earthers.

Holding data apart from their institutional context, you can manipulate them in any way you want. If the fact is what you get when you put experience on trial, data is what lets you stand in for the jury: re-examining the evidence, re-playing the tape, reading the transcripts, coming to your own conclusions. No longer does accessing the facts require navigating an institution (a court clerk, a prosecutor, a police department) that might put them in context: you can find it all on the Internet. Part of the explanation for the explosion of interest in the true crime genre is that superfans can easily play along at home.

At the same time, we have become positively suspicious anytime an institution asks us to rely on its old-fashioned authority or its adherence to the proper verification procedure. It seems paltry and readily manipulated, compared to the independent testimony of an automated recording. As body cameras have proliferated, we’ve become suspicious — perhaps justifiably — of police testimony whenever footage is absent or unreleased. We take a friend’s report on a new restaurant seriously, but we still check Yelp. We have taken nullius in verba — “take no one’s word for it” — to unimaginable levels: We no longer even trust our own senses and memories if we can’t back them up with computer recall.

What is data good for? It isn’t just an arms race of facts — “let me just check one more review.” The fact gives you a knowledge-thing that you can take to the bank. But it can’t go any further than that. If you have enough facts, you can try to build and test a theory to explain and understand the world, and hopefully also make some useful predictions. But if you have a metric ton of facts, and a computer powerful enough to sort through them, you can skip explaining the world with theories and go straight to predictions. Statistical techniques can find patterns and make predictions right off the data — no need for humans to come up with hypotheses to test or theories to understand. If facts unlock the secrets of nature, data unlocks the future.

Instead of laboriously constructing theories out of hard-won facts, analysts with access to vast troves of data can now conduct simulations of all possible futures. Tinker with a few variables and you can figure out which outcomes are the most likely under a variety of circumstances. Given enough data, you can model anything.

But this power — to explore not just reality as it is, but all the realities that might be — has brought about a new danger. If the temptation of the age of facts was to believe that the only things one could know were those that procedural reason or science validated, the temptation of the age of data is to believe that any coherent narrative path that can be charted through the data has a claim to truth, that alternative facts permit alternate realities.

Decades before the superabundance of facts swept over politics and media, it began to revolutionize finance in the 1980s. Deregulation of markets and the adoption of information technology led to a glut of data for financial institutions to sift through in search of the most profitable narratives. Computers could handle making trades, evaluating loans, or collating financial data; the value of skill in these social fact-generating procedures plummeted, while the value of profit-maximizing, creative, and complex strategies built upon these automated systems skyrocketed. Tell the right stories of discounted future cash flows and risk levels and sell these stories to markets, investors, and regulators, and you could turn the straw of accounting and market data into gold. In the world of data, you let the computers write the balance sheet, while you figure out how to structure millions of transactions between corporate entities so they tell a story of laptops designed in California, assembled in Taiwan, and sold in Canada that somehow generated all their profits in low-tax Ireland. One result of this transformation has been the steady destruction of professional norms of fidelity for corporate lawyers, investment bankers, tax accountants, and credit-rating agencies.

Consider how hedge fund manager Michael Burry set up his bet against the American housing market in the mid-2000s, part of what has come to be known as “The Big Short,” after the famous book and film about the housing market crash. As Burry became interested in subprime mortgages, he began reading prospectuses for a common financial instrument in the industry: collateralized debt obligations, which in this case were bundles of mortgages sold as investments. Supposedly, these CDOs would sort, rank, and splice together thousands of mortgages to create a financial instrument that was less risky than the underlying loans. Despite a market for these securities worth trillions of dollars, Burry may well have been the first human being (other than the lawyers who wrote them) to actually read and contemplate the documents that explained the alchemy by which these financial instruments transmuted high-risk mortgages into stable, reliable investments. Every person involved in producing a particular CDO was outsourcing critical inquiry to algorithms, from the loan officers writing particular mortgages, to the ratings-agency analyst scoring the overall CDO, to the bankers buying the completed mortgage-backed security.

At every stage, the humans involved outsourced a question of reality to automated, computerized procedure. The temptation of the age of data, again, is to believe that any coherent narrative path that can be charted through the data has a claim to truth. Burry and his investors made hundreds of millions of dollars by finding a story that everyone believed, where all the procedures had been followed and all the facts piled up, a story that seemed real but wasn’t.

But years before the Global Financial Crisis of 2007–2008, another scandal illustrated in almost every detail the power of data and the allure of simulated realities. The scandal took down a company that Fortune magazine named “America’s Most Innovative Company” six years running, until it collapsed in 2001 in one of the biggest bankruptcies in history: Enron.

The Enron story is often remembered as simple fraud: the company made up fake revenue and hid its losses. The real story is more baroque, and more interesting. For one thing, Enron really was an innovative corporation, which is why much of its revenue was both large and real. Its innovation was to transform natural gas (and later electricity and broadband) from a sleepy business driven by high capital costs and complicated arrangements of buyers and sellers into liquid markets where buyers and sellers could easily tailor transactions to their needs, and where Enron, acting as the middleman, booked major profits.

Think of a contract as a kind of story made into a fact. It has characters, action, and a setting. “In one month, Jim promises to buy five apples from Bob for a dollar each.” In the world before data, natural gas contracts could tell only a very limited number of stories. Unlike other fuels, natural gas can’t readily be stored, and once you’ve started building a well, it’s difficult even to leave the gas in the ground. Natural gas is also hard to move around (it’s a flammable gas, after all), and so how much gas would be available where and when were difficult questions to answer. The physical limitations of the market meant that the two main kinds of stories natural gas contracts could tell were either long or short ones: “Bob will buy 10,000 cubic feet of gas every month for two years at $7.75 per thousand cubic feet,” or “Bob will buy 10,000 cubic feet of gas right now at whatever price the market is demanding.”

The underlying problem was that to base these stories on solid facts required answering a lot of complicated questions: Who will produce the gas? Does the producer have sufficient reserves available to meet demand? Who will process the gas, and does the processor have the capacity to do so when the consumer needs it? Who will transport it? Is there enough pipeline capacity to bring gas from producers to processors to consumers? And just who are the consumers, and how much will they need today and in the future? Will consumers reduce demand in the future and switch to buying more oil instead if oil drops in price? Will demand drop if the weather gets warmer than expected?

Think about the kind of fact the market price of gas was. It was a tidy, portable piece of information containing within itself the needs and demands of gas producers, consumers, and transporters. A social, public process of price discovery tells us that today a cubic foot of gas delivered to your home costs this much. But the certainty and simplicity of the gas fact had a built-in vulnerability: Nobody really knew what the price would be tomorrow, or next month. You cannot, after all, have facts about the future.

Or can you? Commodities traders had long ago invented the “futures contract,” which guaranteed purchase of a commodity at a set price on a future date. This worked well for commodities that were easily stored, transported, or substituted. But natural gas was tricky: storage capacity was limited, so it had to flow in the right amount in the right pipeline, from an active producer. How could you make it behave like grain or oil or another commodity?

Two men at Enron managed to figure it out. Jeffrey Skilling and Rich Kinder both believed in the power of data to break the chains of the stubborn fact.

Jeffrey Skilling was a brilliant McKinsey partner educated at Harvard Business School. He had been consulting for Enron and thought he had come up with a unique solution to the industry’s problems: the Gas Bank. The vision for the Gas Bank was to use Enron’s position in the pipeline market — and the data it provided on the needs of different kinds of buyers and sellers — to treat gas as a commodity.

Throughout the 1980s, Enron had invested in information technology that allowed it to monitor and control flows across its pipelines and coordinate information across the business. The Gas Bank would put this data to work. At the level of physical stuff, little would change: gas would flow from producers and storage to customers. But within Enron’s ledgers, it would look completely different. Rather than simply charging a fee for transporting gas from producers to customers, Enron itself would buy gas from producers (who would act like depositors) before selling it to customers (like a bank’s borrowers), making a profit on the difference. Whether it was the customers or the producers who wanted to enter into contracts, and whether long-term or short-term, didn’t matter: as long as Enron’s portfolio was balanced between depositors and borrowers, it would all work out.

The old hands at Enron didn’t buy the idea. Their heads full of gas facts, they thought Skilling’s vision was a pipe dream, a typical flight of consultant fancy by someone who didn’t understand the hard constraints they faced. But one person who understood the natural gas industry and Enron’s operations better than anyone else was on board: COO Rich Kinder.

Kinder understood that the problem with the natural gas industry was not ultimately a pipeline problem or an investment problem but a risk problem, which is an information problem. Kinder saw that the Gas Bank would let Enron write contracts that told far more complicated kinds of stories, using computer systems to validate the requisite facts on the fly. For example, a utility might want to lock in a low price for next winter’s gas. This would be a risky bet for Enron, unless the company already knew it had enough unsold gas at a lower price from another contract, in which case it could make a tidy profit selling the future guarantee at no risk to itself.
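The logic of that example can be made concrete with hypothetical numbers. The Python sketch below assumes simple fixed-price contracts and ignores transport costs, credit risk, and timing. In it, Enron already holds gas bought for next winter at $2.50 per thousand cubic feet and promises the utility the same volume at $3.00. Valuing both contracts at whatever the winter spot price turns out to be shows the two legs canceling out, so the margin is locked in; selling the same guarantee without the offsetting supply leaves Enron exposed to the spot price.

```python
from typing import Optional

VOLUME_MCF = 10_000   # hypothetical volume, thousand cubic feet
SUPPLY_PRICE = 2.50   # hypothetical price Enron already locked in with a producer, $/Mcf
UTILITY_PRICE = 3.00  # hypothetical fixed price promised to the utility, $/Mcf


def position_pnl(
    spot: float,
    volume: float,
    bought_at: Optional[float] = None,
    sold_at: Optional[float] = None,
) -> float:
    """Profit or loss, in dollars, of fixed-price contracts valued at the spot price."""
    pnl = 0.0
    if bought_at is not None:  # long leg: gas already bought at a fixed price
        pnl += volume * (spot - bought_at)
    if sold_at is not None:    # short leg: gas promised at a fixed price
        pnl += volume * (sold_at - spot)
    return pnl


for spot in (2.00, 3.00, 4.50):
    hedged = position_pnl(spot, VOLUME_MCF, bought_at=SUPPLY_PRICE, sold_at=UTILITY_PRICE)
    unhedged = position_pnl(spot, VOLUME_MCF, sold_at=UTILITY_PRICE)
    print(f"spot ${spot:.2f}: hedged {hedged:+,.0f}  unhedged {unhedged:+,.0f}")
```

Whatever the spot price, the hedged book earns the same $5,000 margin, while the unhedged promise swings from a gain to a loss.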

None of this was illusory. Enron really did solve this thorny information problem, using its combination of market position, size, and data access to create a much more robust natural gas market that was better for everyone. And while the first breakthrough in the Gas Bank, getting gas producers to sign long-term development financing contracts, was just a clever business strategy, it was Enron’s investments in information technology that allowed it to track and manage increasingly complex natural gas contracts and to act as the market maker for the whole industry. Before, the obstinate physicality of natural gas had made it organizationally impossible to write sophisticated contracts for different market actors, because every contract required recalculating reserves, production, cleaning, pipeline capacity, and more. Computer automation increasingly freed traders from worrying about these details, making a once expensive cognitive task very cheap. As Bethany McLean and Peter Elkind described it in their book The Smartest Guys in the Room, “Skilling’s innovation had the effect of freeing natural gas from physical qualities, from the constraints of molecules and movement.”

But once Enron transformed the gas market, it faced a choice: was it a gas company with a successful finance and trading arm, or was it a financial company that happened to own pipelines? There was a reason why only Enron could create the Gas Bank — the Wall Street firms that were interested in financializing the natural gas industry did not have the market information or understanding of the nuances of the business to make it work. But once Enron had solved this problem, its profits from trading and hedging on natural gas contracts quickly outstripped its profits from building and running the pipelines and other physical infrastructure through which actual natural gas was delivered.

Kinder and Skilling came to stand for two different sides of this problem. Both men relied on the optimization and risk management made possible only with computerization and data, the new kinds of stories you could tell. But for Kinder, all of this activity was in the service of producing a better gas industry. Skilling had grander ambitions. He saw the actual building and running of pipelines as a bad business to be in. The Gas Bank meant that Enron was moving up the value chain. Enron, Skilling thought, should farm out the low-margin work of running pipelines and instead concentrate on the much more profitable and dynamic financial business. If Enron controlled the underlying contract, it didn’t matter whether it used its own pipelines to transport gas or a competitor’s.

In Jeffrey Skilling’s vision, Enron would move toward an asset-light model, always on the hunt for “value.” It would build out its trading desk, expand the market for financial instruments for gas, pioneer new markets for trading electricity and broadband as commodities, and master the art of project financing for infrastructure development. And it would pay for all of this activity by selling off, wherever it made sense, the underlying physical assets used to extract, move, and use molecules, whether gas or otherwise.

But the same empire-building logic could be applied within Enron itself. If profit and value emerged not from underlying reality but from information, risk assessment, and financial instruments, well then Enron itself could be commoditized, financialized, derivatized. Under Skilling, first as COO and then CEO, Enron’s primary business was not the production or delivery of energy. Enron was, instead, the world leader in corporate accounting, harnessing its command of data on prices, assets, and revenues — along with loopholes in methods for bookkeeping, regulation, and finance — to paint an appealing picture for investors and regulators about the state of its finances.

Skilling believed that the fundamental value of Enron was the value of its stock, and the value of its stock was the story about the future that the market believed in. Even if the actual gas or oil or electricity that Enron brokered contracts for did not change hands for months or years (or ever!), the profits existed in the real world today, the shadow of the future asserting itself in the present. All the stuff the business did, it did to create the facts needed to tell the best story, to buttress the most profitable future. As long as Wall Street kept buying the story, and as long as Enron could manipulate real-world activity in such a way as to generate the data it needed to maintain its narrative, the value of Enron would rise. Pursuing profit and unencumbered by any allegiance to the “real world,” Skilling sought to steer Enron, and the world, into an alternate reality of pure financialization. But gravity reasserted itself and the company imploded. It went bankrupt in 2001, and in 2006 Skilling was convicted of conspiracy, securities fraud, and other charges and sentenced to prison.

Rich Kinder had taken another path. Leaving Enron in 1997, he formed a new partnership, Kinder Morgan, arranging with Enron to purchase part of its humdrum old pipeline business. He sought value in the physical world of atoms and molecules, after it had been transformed by data. The new financialized natural gas markets generated nuanced data on where market demand outstripped pipeline capacity. Kinder’s new company used this data to return to the business of using pipelines to deliver gas where it was needed, but now more efficiently than ever.

Kinder Morgan today uses all sorts of advanced technology in its pipeline management and energy services, including a partnership with data analysis pioneer Palantir to integrate data points across its network, but its financial operations are transparent and grounded in the realities of the energy sector. Kinder Morgan relies on the wizardry of data to produce efficiencies and manage a sprawling pipeline system many times larger than what was possible in the age of analog systems, but not to spin up alluring alternative futures. The contrast with Skilling’s Enron was not subtle in the title of a 2003 Kinder Morgan report: “Same Old Boring Stuff: Real Assets, Real Earning, Real Cash.”

When Kinder launched his new pipeline company in 1997, few would have guessed that within a decade Kinder Morgan would be worth more than Enron, or that Skilling would end up going to prison while Kinder would become a multibillionaire.

The story of Enron is not only about hubris and accounting fraud, though it is surely about that. It is about whether the superabundance of facts and the control promised by data-driven prediction enable us finally to escape the impositions of material reality. Are you using data to effect some change in the real world, or are you shuffling around piles of facts in support of your preferred stories about the future? Are you more loyal to the world you can hold in your hands or to the beautiful vision on the screen?

What do facts feel like today? The word that was once fixed has become slippery. We are distrustful of the facts because we know there are always more in the database. If we’re not careful, the facts get swapped out under our noses: stealth edits in the historical record, retracted papers vanishing into the ether, new studies disproving the old. The networked computer degrades the fact’s status as an “immutable mobile.”

The difference between honest, data-supported materiality and deceitful, data-backed alternate realities — between Rich Kinder and Jeffrey Skilling — is not a matter of who has the facts. The Skillings of the world will always be able to pull up the requisite facts in the database, right until the end. The difference is instead a matter of who seeks to find the truest path through the data, not the path that is the most persuasive but the one that is the most responsive to reality.

This means that we too have a choice to make. The fact had abolished trust in authority (“take no one’s word for it!”), but the age of the database returns trust in a higher authority to center stage. You’re going to have to trust someone, and you can’t make your way simply by listening to those who claim the power of facts, because everyone does that now.

The temptation will be to listen to the people — the pundits, the politicians, the entrepreneurs — who weave the most appealing story. They may have facts on their side, and the story will be powerful, inspiring, engaging, and profitable. But if it bears no allegiance to reality, at some point the music will stop, the fantasy will burst, and the piper will be paid.

But there will be others who neither adhere to the black-and-white simplicity of the familiar stories nor appeal to our craving for colorful spectacle. Their stories may have flecks of gray and unexpected colors bisecting the familiar battle lines. They may not be telling you all that you want to hear, because the truth is sometimes more boring and sometimes more complicated than we like to imagine. These are the ones who maintain an allegiance to something beyond the narrative sandcastle of superabundant facts. They may be worth following. ♣
